Chapter 4 Natural Experiments

Natural Experiments are situations due to the natural course of events that approximate the conditions of a randomized controlled trial. In the economists’ toolkit, we generally make a distinction between:

- Instrumental variables (IV), that rely on finding a plausibly exogeneous source of variation in treatment intake.

- Regression Discontinuity Designs (RDD), that exploit a discontinuity in the eligibility to the treatment.

- Difference In Differences (DID), that make use of the differential exposure of some groups to the treatment of interest over time.

Remark. The term Natural Experiments seems to be mostly used by economists. It dates back to Haavelmo (1944)’s paper on the Probability Approach to Econometrics, where he makes a distinction between the experiments we’d like to make as social scientists and the experiments that Nature provides us with, that are in general a subset of the experiments we’d like to make. This raises the question of our ability to identify the relationships of interest from the variation that is present in the data, a traditional problem in classical econometrics that has echoes in treatment effect estimation, where we also try to identify treatment effect parameters. At the time of Haavelmo, and until the beginning of the 1990s, there was no real discussion of the plausibility of the identifying assumptions (or restrictions) required for identification of certain relations, outside of a discussion of their theoretical plausiblility. With the credibility revolution brought about by Angrist (1990)’s paper and summarized in Angrist and Krueger (2001)’s review paper, the notion of natural experiment made a come back, with the idea that we might be able to look for specific set of events produced by Nature that more credibly identify a relationship of interest, i.e. that closely approximate true experimental conditions.

Remark. Outside of economics, Natural Experiments have also flourished, but without the term, and were compiled in the early textbook on research methods by Campbell (1966). Both Difference In Differences and Regression Discontinuity Designs have been actually developed outside of economics, mostly in education research. Instrumental Variables have had a separate history in economics and in genetics, were it is called the method of path coefficients.

4.1 Instrumental Variables

Instrumental Variables rely on finding a plausibly exogeneous source of variation in treatment intake. In the simple case of a binary instrument, the identification and estimation parts are actually identical to Encouragements designs in RCTs, that we have already studied in Section 3.4. As a consequence, unless we make very strong assumptions, an IV design is going to recover a Local Average Treatment Effect. Our classical assumptions are going to show up again: Independence, Exclusion Restriction, Monotonicity.

Remark. Examples of Instrumental Variables are:

- Distance to college or to school for studying the impact of college or school enrollement on education, earnings and other outcomes.

- Random draft lottery number for investigating the impact of military experience on earnings and other outcomes.

- Randomized encouragement to participate in order to study the impact of a program.

Remark. The crucial part of an IV design is to justify the credibility of the exclusion restriction and independence assumptions. It is in general very difficult to justify these assumptions, especially the exclusion restriction assumption. In the examples above, one could argue that schools or colleges might be built where they are necessary, i.e. close to destitute populations, or, on the contrary, that they are built far from difficult neighbourhoods. As soon as distance to school becomes correlated with other determinants of schooling, such as parental income and education, the independence assumption is violated.

Even if school placement is truly independent of potential education and earnings outcomes at first, parents, by choosing where to live, will sort themselves such as the parents that pay more attention to education end up located closer to school. As a consequence, the independence assumption might be violated again.

Even when the instrument is truly random, such as a draft lottery number, and thus the independence assumption seems fine, the instrument may directly affect the outcomes by other ways than the treatment of interest. For example, receiving a low draft lottery number makes one more likely to be drafted. In response, one might decide to increase their length of stay in college in order to use the waiver for the draft reserved for students. If receiving a low draft lottery number increases the number of years of education, and in turn subsequent earnings, then the exclusion restriction assumption is violated.

In this section, I’m going to denote \(Z_i\) a binary instrument that can either take value \(0\) or \(1\). In general, we try to reserve the value \(1\) for the instrument value that increases participation in the treatment of interest. In our examples, that would be when for example, the distance to college is low, the draft lottery number is low, or someone receives an encouragement to enter a program.

4.1.1 An example where Monotonicity does not hold

Since Monotonicity is going to play such a particular role, and since we have already explored this assumption a little in Chapter 3, I am going to use as an example a model where the Monotonicity assumption actually does not hold. It will, I hope, help us understand better the way Monotonicity works and how it interacts with the other assumptions. The key component of the model that makes Monotonicity necessary is the fact that treatment effects are heterogeneous and correlated with participation in the treatment. We’ll see later that Monotonicity is unnecessary when treatment effects are orthogonal to take up.

Example 4.1 Let’s see how we can generate a model without Monotonicity:

\[\begin{align*} y_i^1 & = y_i^0+\bar{\alpha}+\theta\mu_i+\eta_i \\ y_i^0 & = \mu_i+\delta+U_i^0 \\ U_i^0 & = \rho U_i^B+\epsilon_i \\ y_i^B & =\mu_i+U_i^B \\ U_i^B & \sim\mathcal{N}(0,\sigma^2_{U}) \\ D_i & = \uns{y_i^B+\kappa_i Z_i + V_i\leq\bar{y}} \\ \kappa_i & = \begin{cases} -\bar{\kappa} & \text{ if } \xi_i = 1 \\ \underline{\kappa} & \text{ if } \xi_i = 0 \end{cases} \\ \xi & \sim\mathcal{B}(p_{\xi}) \\ V_i & = \gamma(\mu_i-\bar{\mu}) + \omega_i \\ (\eta_i,\omega_i) & \sim\mathcal{N}(0,0,\sigma^2_{\eta},\sigma^2_{\omega},\rho_{\eta,\omega}) \\ Z_i & \sim\mathcal{B}(p_Z) \\ Z_i & \Ind (y_i^0,y_i^1,y_i^B,V_i) \\ \xi_i & \Ind (y_i^0,y_i^1,y_i^B,V_i,Z_i) \end{align*}\]

The key component of the model that generates a failure of Monotonicity is the coefficient \(\kappa_i\), that determines how individuals’ participation into the program reacts to the instrument \(Z_i\). \(\kappa_i\) is a coefficient whose value varies accross the population. In my simplified model, \(\kappa_i\) can take only two values, \(-\bar{\kappa}\) or \(\underline{\kappa}\). When \(-\bar{\kappa}\) and \(\underline{\kappa}\) have opposite signs (let’s say \(-\bar{\kappa}<0\) and \(\underline{\kappa}>0\)), then individuals with \(\kappa_i=-\bar{\kappa}\) are going to be more likely to enter the program when they receive an encouragement (when \(Z_i=1\)) while individuals with \(\kappa_i=\underline{\kappa}\) will be less likely to enter the program when \(Z_i=1\). When \(-\bar{\kappa}\) and \(\underline{\kappa}\) have different signs, we have four types of reactions when the instrumental variable moves from \(Z_i=0\) to \(Z_i=1\), holding everything else constant. These four types of reactions define four types of individuals:

- Always takers (\(T_i=a\)): individuals that participate in the program both when \(Z_i=0\) and \(Z_i=1\).

- Never takers (\(T_i=n\)): individuals that do not participate in the program both when \(Z_i=0\) and \(Z_i=1\).

- Compliers (\(T_i=c\)): individuals that do not participate in the program when \(Z_i=0\) but that participate in the program when \(Z_i=1\) .

- Defiers (\(T_i=d\)): individuals that participate in the program when \(Z_i=0\) but that do not participate in the program when \(Z_i=1\) .

In our model, these four types are a function of \(y_i^B+V_i\) and \(\kappa_i\). In order to see this let’s define, as in Section 3.4, \(D^z_i\) the participation decision of individual \(i\) when the instrument is exogenously set to \(Z_i=z\), with \(z\in\left\{0,1\right\}\). When \(\kappa_i=-\bar{\kappa}<0\), we have three types of reactions to the instrument. It turns out that each of type can be defined by where \(y_i^B+V_i\) lies with respect to a series of thresholds:

- Always takers (\(T_i=a\)) are such that \(D^1_i=\uns{y_i^B-\bar{\kappa} + V_i\leq\bar{y}}=1\) and \(D^0_i=\uns{y_i^B + V_i\leq\bar{y}}=1\), so that they actually are such that: \(y_i^B+V_i\leq\bar{y}\). This is because \(y_i^B+V_i\leq\bar{y} \Rightarrow y_i^B+V_i\leq\bar{y}+\bar{\kappa}\), when \(\bar{\kappa}>0\).

- Never takers (\(T_i=n\)) are such that \(D^1_i=\uns{y_i^B-\bar{\kappa} + V_i\leq\bar{y}}=0\) and \(D^0_i=\uns{y_i^B + V_i\leq\bar{y}}=0\), so that they actually are such that: \(y_i^B+V_i>\bar{y}+\bar{\kappa}\). This is because \(y_i^B+V_i>\bar{y}+\bar{\kappa} \Rightarrow y_i^B+V_i>\bar{y}\), when \(\bar{\kappa}>0\).

- Compliers (\(T_i=c\)) are such that \(D^1_i=\uns{y_i^B-\bar{\kappa} + V_i\leq\bar{y}}=1\) and \(D^0_i=\uns{y_i^B + V_i\leq\bar{y}}=0\), so that they actually are such that: \(\bar{y}<y_i^B+V_i\leq\bar{y}+\bar{\kappa}\).

When \(\kappa_i=\underline{\kappa}>0\), we have three types defined by where \(V_i\) lies with respect to a series of thresholds:

- Always takers (\(T_i=a\)) are such that \(D^1_i=\uns{y_i^B+\underline{\kappa} + V_i\leq\bar{y}}=1\) and \(D^0_i=\uns{y_i^B + V_i\leq\bar{y}}=1\), so that they actually are such that: \(y_i^B+V_i\leq\bar{y}-\underline{\kappa}\). This is because \(y_i^B+V_i\leq\bar{y}-\underline{\kappa} \Rightarrow y_i^B+V_i\leq\bar{y}\), when \(\underline{\kappa}>0\).

- Never takers (\(T_i=n\)) are such that \(D^1_i=\uns{y_i^B-\bar{\kappa} + V_i\leq\bar{y}}=0\) and \(D^0_i=\uns{y_i^B + V_i\leq\bar{y}}=0\), so that they actually are such that: \(y_i^B+V_i>\bar{y}\). This is because \(y_i^B+V_i>\bar{y} \Rightarrow y_i^B+V_i\leq\bar{y}-\underline{\kappa}\), when \(\underline{\kappa}>0\).

- Defiers (\(T_i=d\)) are such that \(D^1_i=\uns{y_i^B+\underline{\kappa} + V_i\leq\bar{y}}=0\) and \(D^0_i=\uns{y_i^B + V_i\leq\bar{y}}=1\), so that they actually are such that: \(\bar{y}-\underline{\kappa}<V_i+y_i^B\leq\bar{y}\).

Let’s visualize how this works in a plot. Before that, let’s generate some data according to this process. For that, let’s choose values for the new parameters.

param <- c(8,.5,.28,1500,0.9,0.01,0.05,0.05,0.05,0.1,0.1,7.98,0.5,1,0.5,0.9,0.28,0)

names(param) <- c("barmu","sigma2mu","sigma2U","barY","rho","theta","sigma2epsilon","sigma2eta","delta","baralpha","gamma","baryB","pZ","barkappa","underbarkappa","pxi","sigma2omega","rhoetaomega")set.seed(1234)

N <-1000

cov.eta.omega <- matrix(c(param["sigma2eta"],param["rhoetaomega"]*sqrt(param["sigma2eta"]*param["sigma2omega"]),param["rhoetaomega"]*sqrt(param["sigma2eta"]*param["sigma2omega"]),param["sigma2omega"]),ncol=2,nrow=2)

eta.omega <- as.data.frame(mvrnorm(N,c(0,0),cov.eta.omega))

colnames(eta.omega) <- c('eta','omega')

mu <- rnorm(N,param["barmu"],sqrt(param["sigma2mu"]))

UB <- rnorm(N,0,sqrt(param["sigma2U"]))

yB <- mu + UB

YB <- exp(yB)

Ds <- rep(0,N)

Z <- rbinom(N,1,param["pZ"])

xi <- rbinom(N,1,param["pxi"])

kappa <- ifelse(xi==1,-param["barkappa"],param["underbarkappa"])

V <- param["gamma"]*(mu-param["barmu"])+eta.omega$omega

Ds[yB+kappa*Z+V<=log(param["barY"])] <- 1

epsilon <- rnorm(N,0,sqrt(param["sigma2epsilon"]))

U0 <- param["rho"]*UB + epsilon

y0 <- mu + U0 + param["delta"]

alpha <- param["baralpha"]+ param["theta"]*mu + eta.omega$eta

y1 <- y0+alpha

Y0 <- exp(y0)

Y1 <- exp(y1)

y <- y1*Ds+y0*(1-Ds)

Y <- Y1*Ds+Y0*(1-Ds)We can now define the types variable \(T_i\):

D1 <- ifelse(yB+kappa+V<=log(param["barY"]),1,0)

D0 <- ifelse(yB+V<=log(param["barY"]),1,0)

AT <- ifelse(D1==1 & D0==1,1,0)

NT <- ifelse(D1==0 & D0==0,1,0)

C <- ifelse(D1==1 & D0==0,1,0)

D <- ifelse(D1==0 & D0==1,1,0)

Type <- ifelse(AT==1,'a',

ifelse(NT==1,'n',

ifelse(C==1,'c',

ifelse(D==1,'d',""))))

data.non.mono <- data.frame(cbind(Type,C,NT,AT,D1,D0,Y,y,Y1,Y0,y0,y1,yB,alpha,U0,eta.omega$eta,epsilon,Ds,kappa,xi,Z,mu,UB))#ggplot(data.non.mono, aes(x=V, y=yB),color(as.factor(Type))) +

# geom_point(shape=1)+

# facet_grid(.~ as.factor(kappa))

plot(yB[AT==1 & kappa==-param["barkappa"]]+V[AT==1 & kappa==-param["barkappa"]],y[AT==1 & kappa==-param["barkappa"]],pch=1,xlim=c(5,11),ylim=c(5,11),xlab='yB+V',ylab="Outcomes")

points(yB[NT==1 & kappa==-param["barkappa"]]+V[NT==1 & kappa==-param["barkappa"]],y[NT==1 & kappa==-param["barkappa"]],pch=1,col='blue')

points(yB[C==1 & kappa==-param["barkappa"]]+V[C==1 & kappa==-param["barkappa"]],y[C==1 & kappa==-param["barkappa"]],pch=1,col='red')

points(yB[D==1 & kappa==-param["barkappa"]]+V[D==1 & kappa==-param["barkappa"]],y[D==1 & kappa==-param["barkappa"]],pch=1,col='green')

abline(v=log(param["barY"]),col='red')

abline(v=log(param["barY"])+param['barkappa'],col='red')

#abline(v=log(param["barY"])-param['underbarkappa'],col='red')

text(x=c(log(param["barY"]),log(param["barY"])+param['barkappa']),y=c(5,5),labels=c(expression(bar('y')),expression(bar('y')+bar(kappa))),pos=c(2,4),col=c('red','red'),lty=c('solid','solid'))

legend(5,10.5,c('AT','NT','C','D'),pch=c(1,1,1,1),col=c('black','blue','red','green'),ncol=1)

title(expression(kappa=bar(kappa)))

plot(yB[AT==1 & kappa==param["underbarkappa"]]+V[AT==1 & kappa==param["underbarkappa"]],y[AT==1 & kappa==param["underbarkappa"]],pch=1,xlim=c(5,11),ylim=c(5,11),xlab='yB+V',ylab="Outcomes")

points(yB[NT==1 & kappa==param["underbarkappa"]]+V[NT==1 & kappa==param["underbarkappa"]],y[NT==1 & kappa==param["underbarkappa"]],pch=1,col='blue')

points(yB[C==1 & kappa==param["underbarkappa"]]+V[C==1 & kappa==param["underbarkappa"]],y[C==1 & kappa==param["underbarkappa"]],pch=1,col='red')

points(yB[D==1 & kappa==param["underbarkappa"]]+V[D==1 & kappa==param["underbarkappa"]],y[D==1 & kappa==param["underbarkappa"]],pch=1,col='green')

abline(v=log(param["barY"]),col='red')

#abline(v=log(param["barY"])-param['barkappa'],col='red')

abline(v=log(param["barY"])-param['underbarkappa'],col='red')

text(x=c(log(param["barY"]),log(param["barY"])-param['underbarkappa']),y=c(5,5),labels=c(expression(bar('y')),expression(bar('y')-underbar(kappa))),pos=c(2,2),col=c('red','red'),lty=c('solid','solid'))

legend(5,10.5,c('AT','NT','C','D'),pch=c(1,1,1,1),col=c('black','blue','red','green'),ncol=1)

title(expression(kappa=underbar(kappa)))

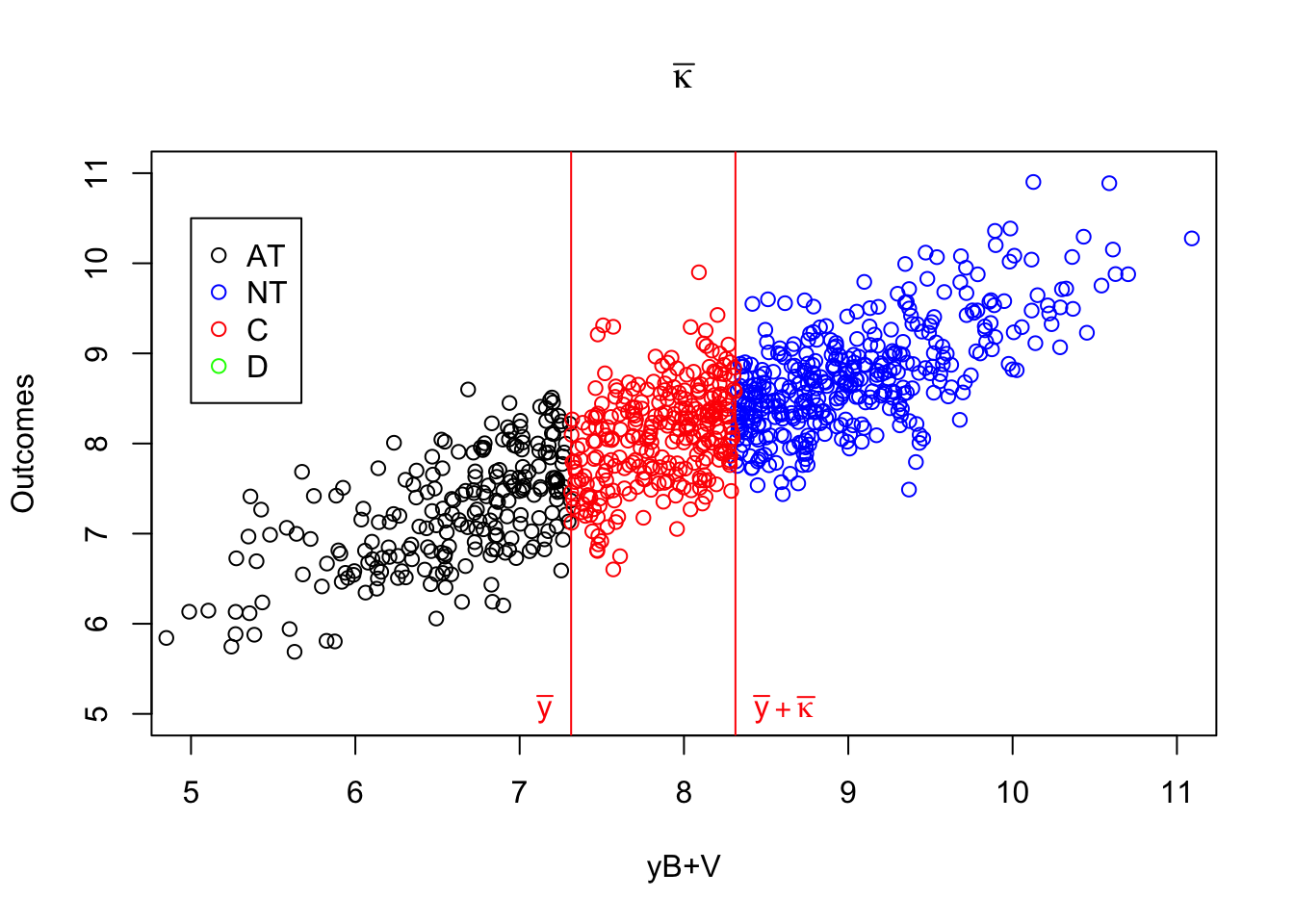

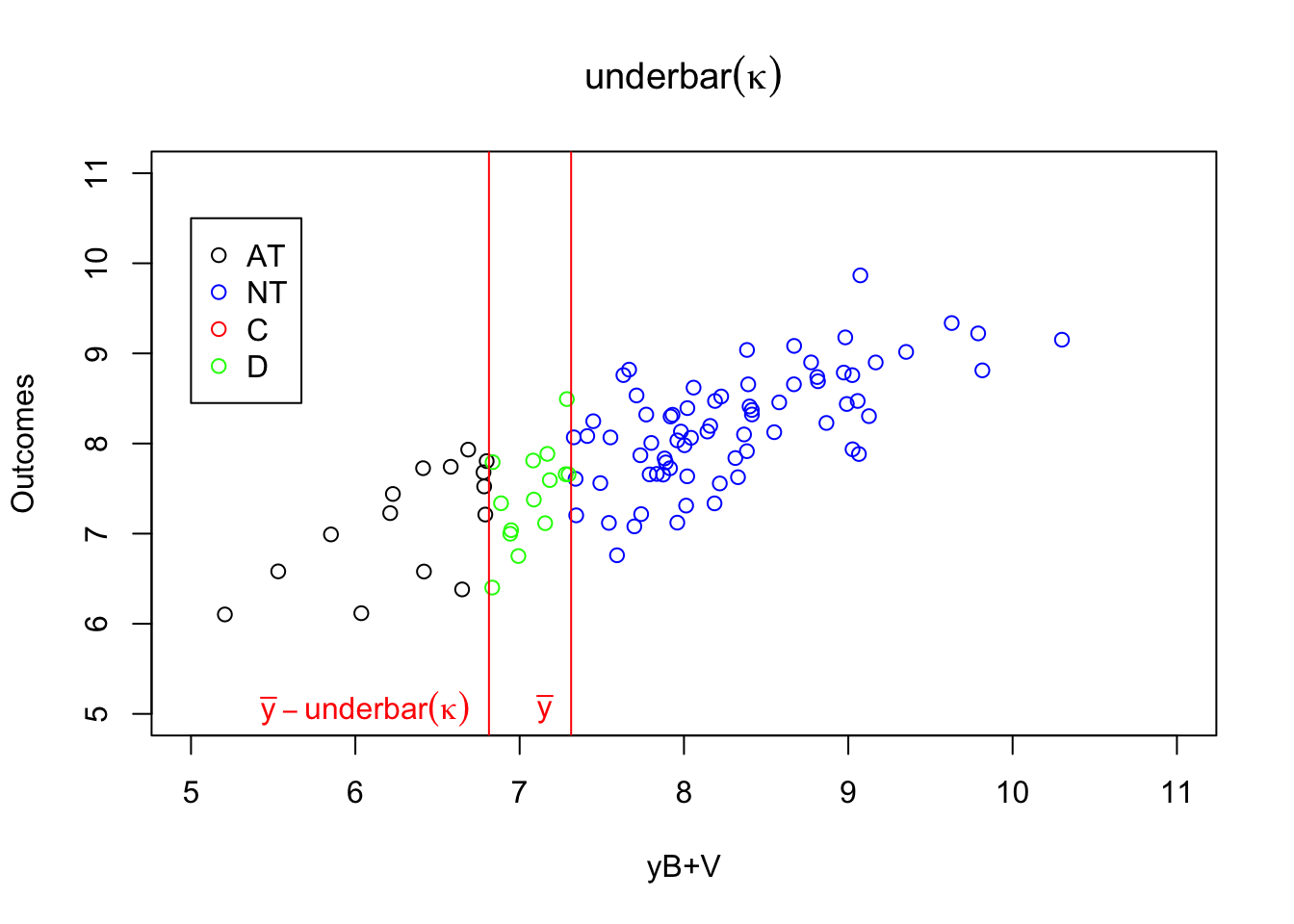

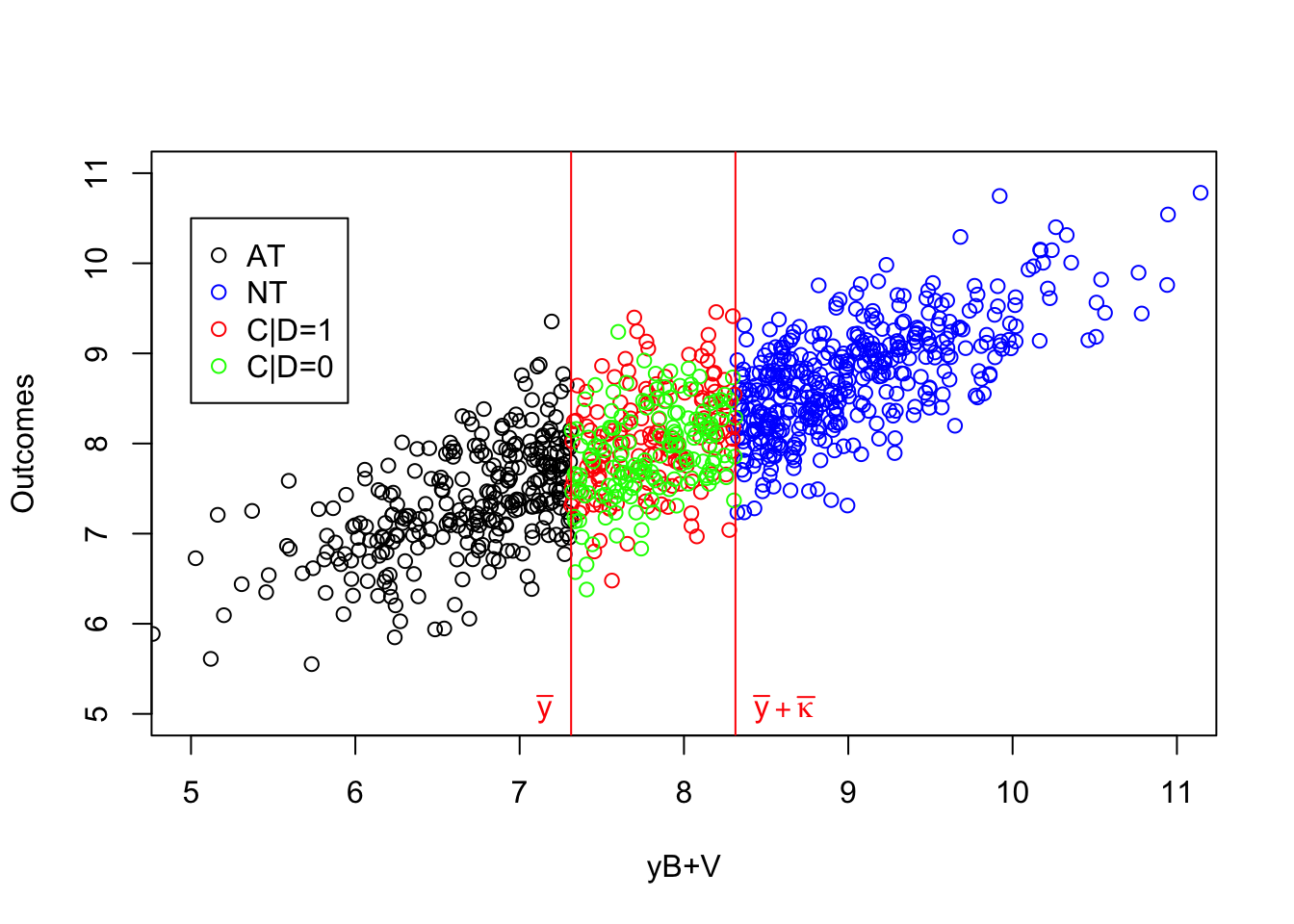

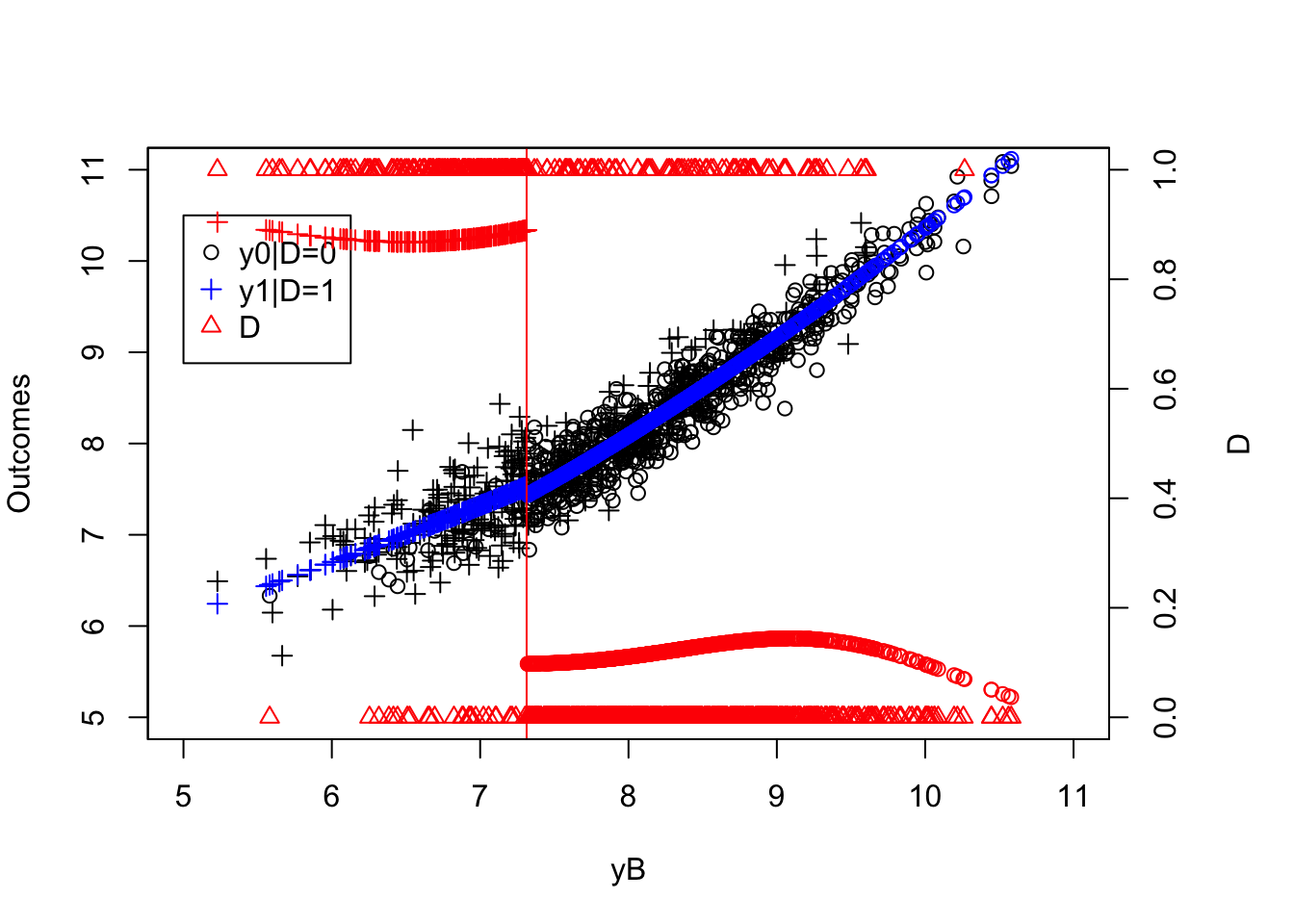

Figure 4.1: Types

As Figure 4.1 shows how the different types interact with \(\kappa_i\). When \(\kappa_i=-\bar{\kappa}\), individuals with \(y_i^B+V_i\) below \(\bar{y}\) always take the program. Even when \(Z_i=1\) and \(\bar{\kappa}\) is subtracted from their index, it is still low enough so that they get to participate. When \(y_i^B+V_i\) is in between \(\bar{y}\) and \(\bar{y}+\bar{\kappa}\), the individuals are such that their index without subtracting \(\bar{\kappa}\) is above \(\bar{y}\), but it is below \(\bar{y}\) when \(\bar{\kappa}\) is subtracted from it. These individuals participate when \(Z_i=1\) and do not participate when \(Z_i=0\): they are compliers. Individuals such that \(y_i^B+V_i\) is above \(\bar{y}+\bar{\kappa}\) will have an index above \(\bar{y}\) whether we substract \(\bar{\kappa}\) from it or not. They are never takers.

When \(\kappa_i=\underline{\kappa}\), individuals with \(y_i^B+V_i\) below \(\bar{y}-\underline{\kappa}\) always take the program. Even when \(Z_i=0\) and \(\underline{\kappa}\) is not subtracted from their index, it is still low enough so that they get to participate. When \(y_i^B+V_i\) is in between \(\bar{y}-\underline{\kappa}\) and \(\bar{y}\), the individuals are such that their index without adding \(\underline{\kappa}\) is below \(\bar{y}\), but it is above \(\bar{y}\) when \(\underline{\kappa}\) is added to it. These individuals participate when \(Z_i=0\) and do not participate when \(Z_i=1\): they are defiers. Individuals such that \(y_i^B+V_i\) is above \(\bar{y}\) will have an index above \(\bar{y}\) whether we add \(\underline{\kappa}\) from it or not. They are never takers.

4.1.2 Identification

We need several assumptions for identification in an Instrumental Variable framework. We are going to explore two sets of assumption that secure the identification of two different parameters:

- The Average Treatment Effect on the Treated (\(TT\)): identification will happen through the assumption of independence of treatment effects from potential treatment choice

- The Local Average Treatment Effect (\(LATE\))

Hypothesis 4.1 (First Stage Full Rank) We assume that the instrument \(Z_i\) has a direct effect on treatment participation:

\[\begin{align*} \Pr(D_i=1|Z_i=1)\neq\Pr(D_i=1|Z_i=0). \end{align*}\]

Example 4.2 Let’s see how this assumption works in our example. Let’s first compute the average values of \(Y_i\) and \(D_i\) as a function of \(Z_i\), for later use.

means.IV <- c(mean(Ds[Z==0]),mean(Ds[Z==1]),mean(y0[Z==0]),mean(y0[Z==1]),mean(y[Z==0]),mean(y[Z==1]),0,1)

means.IV <- matrix(means.IV,nrow=2,ncol=4,byrow=FALSE,dimnames=list(c('Z=0','Z=1'),c('D','y0','y','Z')))

means.IV <- as.data.frame(means.IV)

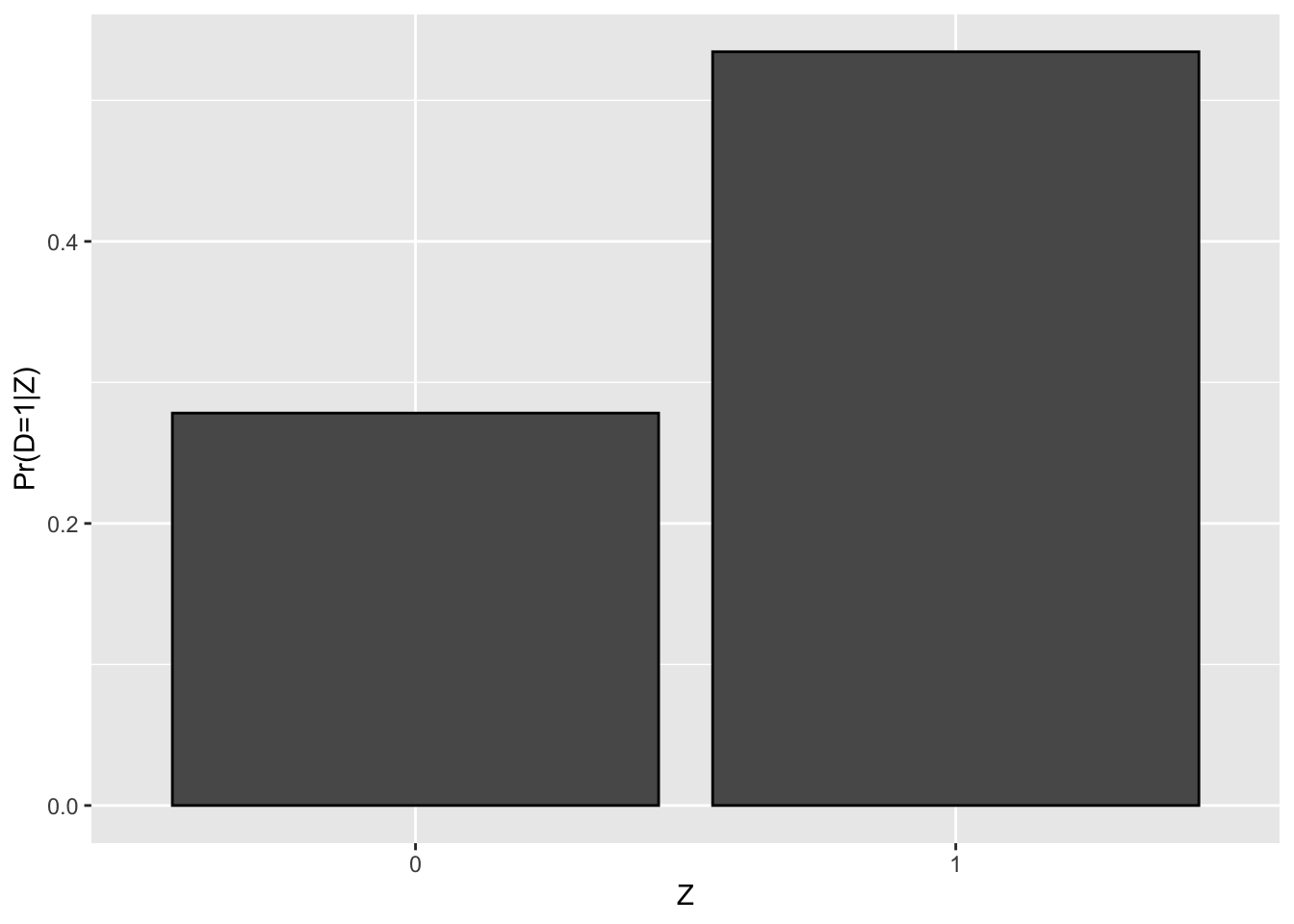

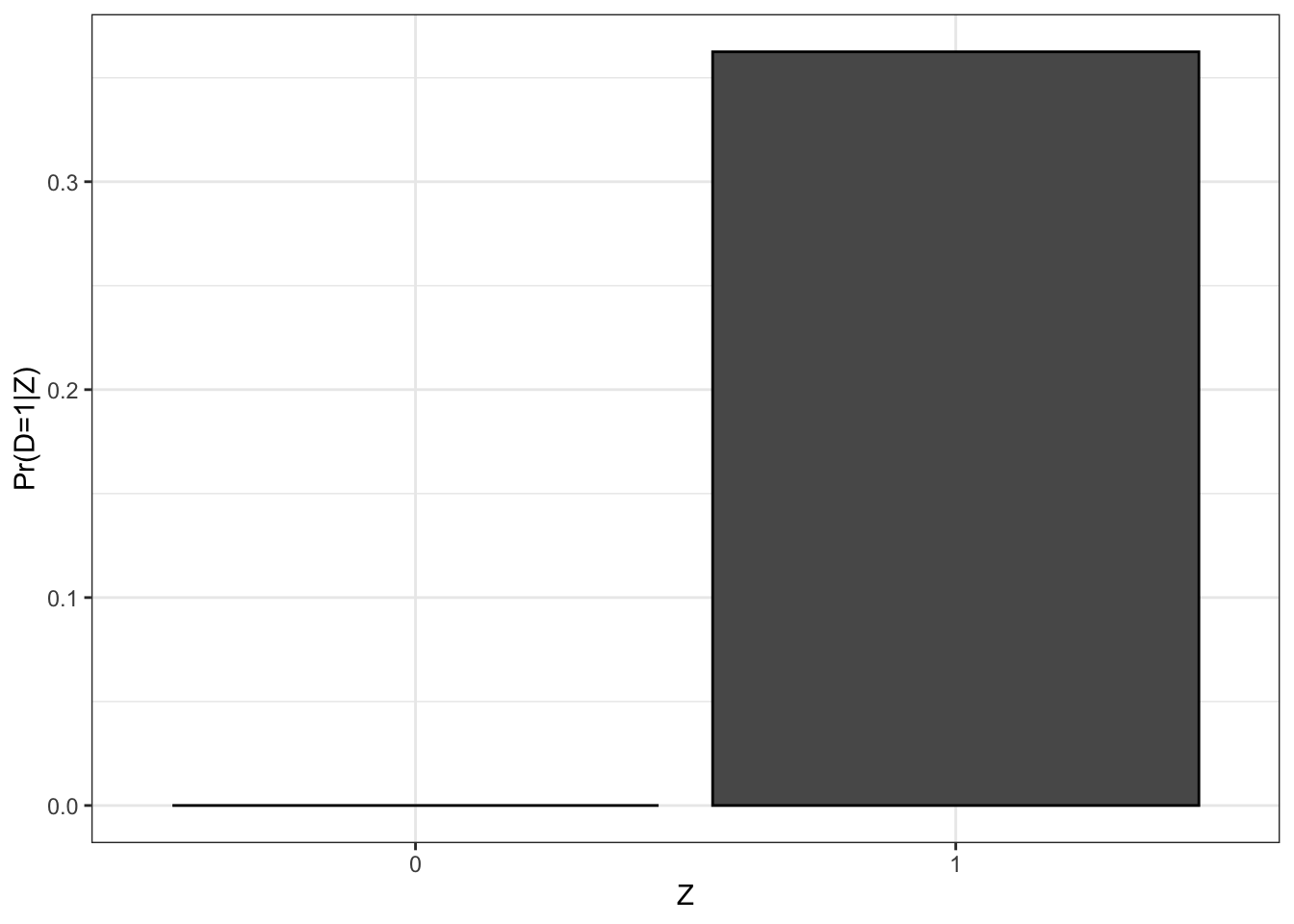

Figure 4.2: Proportion of participants as a function of \(Z_i\)

Figure 4.2 shows that the proportion of treated when \(Z_i=1\) in our sample is equal to 0.53 while the proportion of treated when \(Z_i=0\) is equal to 0.28, in accordance with Assumption 4.1. In the population, the proportion of treated when \(Z_i=1\) depends on the value of \(\kappa_i\). Let’s derive its value:

\[\begin{align*} \Pr(D_i=1|Z_i=1) & = \Pr(y_i^B+\kappa_i Z_i + V_i\leq\bar{y}|Z_i=1) \\ & = \Pr(y_i^B+\kappa_i + V_i\leq\bar{y}) \\ & = \Pr(y_i^B+ V_i\leq\bar{y}+\bar{\kappa}|\xi_i=1)\Pr(\xi_i=1) + \Pr(y_i^B+V_i\leq\bar{y}-\underline{\kappa}|\xi_i=0)\Pr(\xi_i=0) \\ & = \Pr(y_i^B+ V_i\leq\bar{y}+\bar{\kappa})p_{\xi} + \Pr(y_i^B+ V_i\leq\bar{y}-\underline{\kappa})(1-p_{\xi}) \\ & = p_{\xi}\Phi\left(\frac{\bar{y}+\bar{\kappa}-\bar{\mu}}{\sqrt{(1+\gamma^2)\sigma^2_{\mu}+\sigma^2_{U}+\sigma^2_{\omega}}}\right) + (1-p_{\xi})\Phi\left(\frac{\bar{y}-\underline{\kappa}-\bar{\mu}}{\sqrt{(1+\gamma^2)\sigma^2_{\mu}+\sigma^2_{U}+\sigma^2_{\omega}}}\right) \end{align*}\]

where the second equality follows from \(Z_i\) being independent of \((y_i^0,y_i^1,y_i^B,V_i)\), the third equality follows from \(\xi_i\) being independent from \((y_i^0,y_i^1,y_i^B,V_i,Z_i)\) and the last equality follows from the formula for the cumulative of a normal distribution. The formula for \(\Pr(D_i=1|Z_i=0)\) is the same except for \(\bar{\kappa}\) and \(\underline{\kappa}\) that are set to zero.

Let’s write two functions to compute these probabilities:

prob.D.Z.1 <- function(param){

part.1 <- param['pxi']*pnorm((log(param["barY"])+param['barkappa']-param['barmu'])/sqrt((1+param['gamma']^2)*param['sigma2mu']+param["sigma2U"]+param['sigma2omega']))

part.2 <- (1-param['pxi'])*pnorm((log(param["barY"])-param['underbarkappa']-param['barmu'])/sqrt((1+param['gamma']^2)*param['sigma2mu']+param["sigma2U"]+param['sigma2omega']))

return(part.1+part.2)

}

prob.D.Z.0 <- function(param){

part.1 <- param['pxi']*pnorm((log(param["barY"])-param['barmu'])/sqrt((1+param['gamma']^2)*param['sigma2mu']+param["sigma2U"]+param['sigma2omega']))

part.2 <- (1-param['pxi'])*pnorm((log(param["barY"])-param['barmu'])/sqrt((1+param['gamma']^2)*param['sigma2mu']+param["sigma2U"]+param['sigma2omega']))

return(part.1+part.2)

}With these functions, we know that, in the population, \(\Pr(D_i=1|Z_i=1)=\) 0.57 and \(\Pr(D_i=1|Z_i=0)=\) 0.25, which is not far from what we have found in our sample.

Our next set of assumptions imposes that the instrument has no direct effect on the outcome and that it is not correlated with all the potential outcomes. Let’s start with the exclusion restriction:

Hypothesis 4.2 (Exclusion Restriction) We assume that there is no direct effect of \(Z_i\) on outcomes:

\[\begin{align*} \forall d,z \in \left\{0,1\right\}\text{, } Y_i^{d,z} = Y_i^d. \end{align*}\]

Example 4.3 In our example, this assumption is automatically satisfied.

Indeed, \(y_i^{d,z}=y_i^0 + d(y_i^1-y_i^0)\) which is parameterized as \(y_i^{d,z}=\mu_i+\delta+U_i^0+d(\bar{\alpha}+\theta\mu_i+\eta_i)\). Since \(y_i^{d,z}\) does not depend on \(z\), we have \(y_i^{d,z} = y_i^d\), \(\forall d,z \in \left\{0,1\right\}\). The assumption would not be satisfied if \(Z_i\) entered the equations for \(y_i^0\) or \(y_i^1\). For example, if \(Z_i\) is the Vietnam draft lottery number (high or low) used by Angrist to study the impact of army experience on earnings, the exclusion restriction would not work if \(Z_i\) was directly influencing outcomes, independent of miitary experience, by example by generating a higher education level. In that case, we could have \(E_i=\alpha+\beta Z_i + v_i\), where \(E_i\) is education, and, for example, \(y_i^0=\mu_i+\delta+\lambda E_i+U_i^0\). We then have \(y_i^{d,z}=\mu_i+\delta +\lambda(\alpha+\beta z + v_i) +U_i^0+d(\bar{\alpha}+\theta\mu_i+\eta_i)\) which depends on \(z\) and thus the exclusion restriction does not hold any more.

Let us now state the independence assumption:

Hypothesis 4.3 (Independence) We assume that \(Z_i\) is independent from the other determinants of \(Y_i\) and \(D_i\):

\[\begin{align*} (Y_i^1,Y_i^0,D_i^1,D_i^0)\Ind Z_i. \end{align*}\]

Remark. Why do we say that independence from the potential outcomes is the same as independence from the other determinants of \(Y_i\) and \(D_i\)? Because the only sources of variation that remain in \(Y_i^d\) and \(D_i^z\) are the other sources of variations (that is not the treatment \(D_i=d\) nor the instrument variable \(Z_i=z\)).

Example 4.4 In our example, this assumption is also satisfied.

If we assumed that unobserved determinants of earnings contained in \(U^0_i\) are correlated with the instrument value, then we would have a problem. For example, if children that leave close to college have also rich parents, or parents that spend a lot of time with them, or parents with large networks, there probably is a correlation between distance to college and earnings in the absence of the program. For the draft lottery example, you might have that people with a high draft lottery number who have well-connected parents obtain discharges on special medical grounds. Is that a violation of the independence assumption? Actually no. Indeed, these individuals are simply going to become never takers (they avoid the draft whatever their lottery number). But \(Z_i\) is still independent from the level of connections of the parents. For the independence assumption to fail in the draft lottery number example, you would need that children of well-connected parents obtain lower lottery numbers because the lottery is rigged. In that case, since well-connected individuals would have had higher earnings even absent the lottery, there is a negative correlation between \(y_i^0\) and having a high draft lottery number (\(Z_i\)).

The last assumption we need in order to identify the Local Average Treatment Effect is that of Monotonicity. We already know this assumption:

Hypothesis 4.4 (Monotonicity) We assume that the instrument moves everyone in the population in the same direction:

\[\begin{align*} \forall i\text{, either } D^1_i\geq D_i^0 \text{ or } D^1_i\leq D_i^0. \end{align*}\]

Without loss of generality, we generally assume that \(\forall i\), \(D^1_i\geq D_i^0\). As a consequence, there are no defiers.

Example 4.5 In our example, this assumption is not satisfied.

There are defiers, as Figure 4.1 shows, when \(\xi_i = 0\) and thus \(\kappa_i=\underline{\kappa}\). Indeed, in that case, for the individuals who are such that \(\bar{y}-\underline{\kappa}<y_i^B+V_i\leq\bar{y}\), we have \(D^1_i=\uns{y_i^B+\underline{\kappa} + V_i\leq\bar{y}}=0\) and \(D^0_i=\uns{y_i^B + V_i\leq\bar{y}}=1\). This would happen for example if some people would go to college less if their house is located closer to the college, maybe for example because they have a preference not to stay at their parents’ house.

Remark. Why are defiers a problem for the instrumental variable strategy? Because the Intention to Treat Effect that measures the difference in expected outcomes at the two levels of the instrument is going to be characterized by two-way flows in and out of the program, as we have already seen with Theorem 3.10. This means that some treatment effects will have negative weights in the ITE formula. In that case, you might have a negative Intention to Treat Effect despite the treatment having positive effects for everyone, or you might under estimate the true effect of the treatment. This matters only when the treatment effects are heterogeneous.

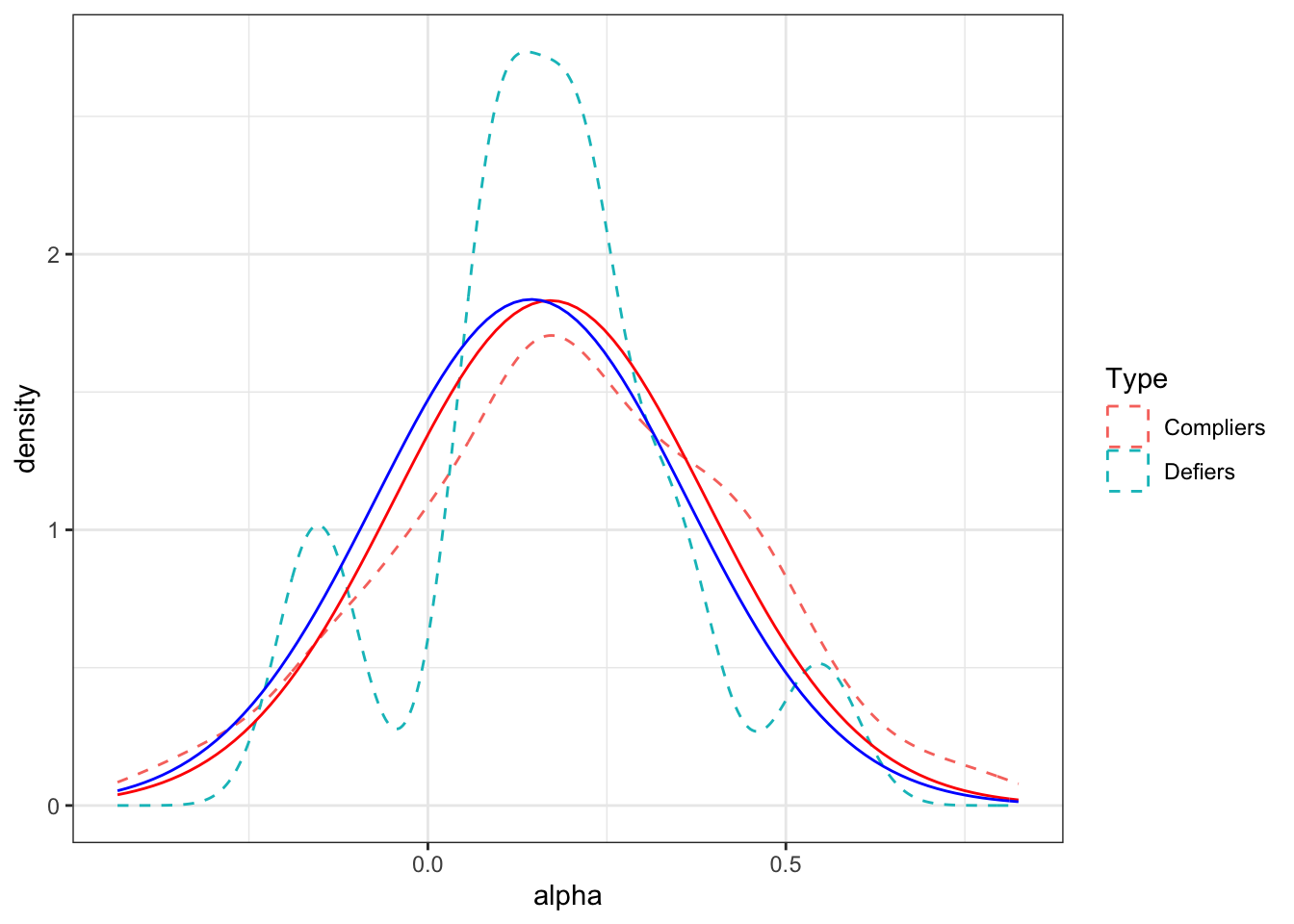

Example 4.6 Let us detail how non-monotonicity and the existence defiers act on the ITE in our example, since we now have defiers. The first very important thing to understand is that all the problems we have happend because treatment effects are heterogeneous AND they are correlated with the type of individuals: defiers and compliers do not have the same distribution of treatment effects and, case in point, they do not have the same average treatment effects. The average effects of the treatment on compliers and defiers are not the same. Let us first look at the distribution of treatment effects among compliers and defiers in the sample and in the population.

In order to derive the distribution of \(\alpha_i\) conditional on Type in the population, we need to derive the joint distribution of \(\alpha_i\) and \(y_i^B+V_i\) and use the trmtvnorm package to recover its density when it is truncated. This distribution is normal and fully characterized by its mean and covariance matrix.

\[\begin{align*} (\alpha_i,y_i^B+V_i) & \sim \mathcal{N}\left(\bar{\alpha}+\theta\bar{\mu},\bar{\mu}, \left(\begin{array}{cc} \theta^2\sigma^2_{\mu}+\sigma^2_{\eta} & (\theta+\gamma)\sigma^2_{\mu}+\rho_{\eta,\omega}\sigma^2_{\eta}\sigma^2_{\omega}\\ (\theta+\gamma)\sigma^2_{\mu}+\rho_{\eta,\omega}\sigma^2_{\eta}\sigma^2_{\omega} & (1+\gamma^2)\sigma^2_{\mu}+\sigma^2_U+\sigma^2_{\omega}\\ \end{array} \right) \right) \end{align*}\]

Let us write a function to generate them.

mean.alpha.yBV <- c(param['baralpha']+param['theta']*param['barmu'],param['barmu'])

cov.alpha.yBV <- matrix(c((param['theta']^2)*param['sigma2mu']+param['sigma2eta'],

(param['theta']+param['gamma'])*param['sigma2mu']+param['rhoetaomega']*param['sigma2eta']*param['sigma2omega'],

(param['theta']+param['gamma'])*param['sigma2mu']+param['rhoetaomega']*param['sigma2eta']*param['sigma2omega'],

(1+param['gamma']^2)*param['sigma2mu']+param['sigma2U']+param['sigma2omega']),2,2,byrow=TRUE)

# density of alpha for compliers

lower.cut.comp <- c(-Inf,log(param['barY']))

upper.cut.comp <- c(Inf,log(param['barY'])+param['barkappa'])

d.alpha.compliers <- function(x){

return(dtmvnorm.marginal(xn=x,n=1,mean=mean.alpha.yBV,sigma=cov.alpha.yBV,lower=lower.cut.comp,upper=upper.cut.comp))

}

# density of alpha for defiers

lower.cut.def <- c(-Inf,log(param['barY']-param['underbarkappa']))

upper.cut.def <- c(Inf,log(param['barY']))

d.alpha.defiers <- function(x){

return(dtmvnorm.marginal(xn=x,n=1,mean=mean.alpha.yBV,sigma=cov.alpha.yBV,lower=lower.cut.def,upper=upper.cut.def))

}Let us now plot the empirical and theoretical distributions of the treatment effects for compliers and defiers.

# building the data frame

alpha.types <- as.data.frame(cbind(alpha,C,D,AT,NT)) %>%

mutate(

Type = ifelse(AT==1,"Always Takers",

ifelse(NT==1,"Never Takers",

ifelse(C==1,"Compliers","Defiers")))

) %>%

mutate(Type = as.factor(Type))

ggplot(filter(alpha.types,Type=="Compliers" | Type=="Defiers"), aes(x=alpha, colour=Type)) +

geom_density(linetype="dashed") +

geom_function(fun = d.alpha.compliers, colour = "red") +

geom_function(fun = d.alpha.defiers, colour = "blue") +

ylab('density') +

theme_bw()

Figure 4.3: Distribution of treatment effects by Type in the sample (dashed line) and in the population (full line)

Figure 4.3 shows that the two distributions are actually very similar in our example. The distribution for the compliers is slightly above that for the defiers, meaning that the defiers should have lower expected outcomes in the population. Let us check that by computing the average outcomes of compliers and defiers both in the sample and in the population.

# sample means

mean.alpha.compliers.samp <- mean(alpha[C==1])

mean.alpha.defiers.samp <- mean(alpha[D==1])

# population means

mean.alpha.compliers.pop <- mtmvnorm(mean=mean.alpha.yBV,sigma=cov.alpha.yBV,lower=lower.cut.comp,upper=upper.cut.comp,doComputeVariance=FALSE)[[1]]

mean.alpha.defiers.pop <- mtmvnorm(mean=mean.alpha.yBV,sigma=cov.alpha.yBV,lower=lower.cut.def,upper=upper.cut.def,doComputeVariance=FALSE)[[1]]In the population, the average treatment effect for compliers is equal to 0.17 and the average treatment effect for defiers is equal to 0.14. In the sample, the average treatment effect for compliers is equal to 0.2 and the average treatment effect for defiers is equal to 0.16.

The difference between the treatment effect for compliers and defiers is a problem for the Wald estimator. Let’s look at how the Wald estimator behaves in the population (in order to avoid considerations due to sampling noise). By Theorem 3.10, the numerator of the Wald estimator is equal to the difference between the average treatment on compliers and the average treatment effect on defiers weighted by their respective proportions in the population. In order to be able to compute the Wald estimator, we need to compute the proportion of compliers and of defiers in the population. These proportions are equal to:

\[\begin{align*} \Pr(T_i=c) & = \Pr(\bar{y}< y_i^B+V_i\leq\bar{y}+\bar{\kappa}\cap\kappa_i=-\bar{\kappa}) \\ & = \Pr(\bar{y}< y_i^B+V_i\leq\bar{y}+\bar{\kappa})p_{\xi} \\ \Pr(T_i=d) & = \Pr(\bar{y}-\underline{\kappa}< y_i^B+V_i\leq\bar{y}\cap\kappa_i=\underline{\kappa}) \\ & = \Pr(\bar{y}-\underline{\kappa}< y_i^B+V_i\leq\bar{y})(1-p_{\xi}), \end{align*}\]

where the second equality follows from the fact that \(\xi\) is independent from \(y_i^B+V_i\) and uses the fact that \(\Pr(A\cap B)=\Pr(A|B)\Pr(B)\). Since \(y_i^B+V_i\) is normally distributed and we know its mean and variance, these proportions can be computed as:

\[\begin{align*} \Pr(T_i=c) & = p_{\xi}\left(\Phi\left(\frac{\bar{y}+\bar{\kappa}-\bar{\mu}}{\sqrt{(1+\gamma^2)\sigma^2_{\mu}+\sigma^2_U+\sigma^2_{\omega}}}\right) -\Phi\left(\frac{\bar{y}-\bar{\mu}}{\sqrt{(1+\gamma^2)\sigma^2_{\mu}+\sigma^2_U+\sigma^2_{\omega}}}\right)\right) \\ \Pr(T_i=d) & = (1-p_{\xi})\left(\Phi\left(\frac{\bar{y}-\bar{\mu}}{\sqrt{(1+\gamma^2)\sigma^2_{\mu}+\sigma^2_U+\sigma^2_{\omega}}}\right) -\Phi\left(\frac{\bar{y}-\underline{\kappa}-\bar{\mu}}{\sqrt{(1+\gamma^2)\sigma^2_{\mu}+\sigma^2_U+\sigma^2_{\omega}}}\right)\right). \end{align*}\]

Let’s write functions to compute these objects:

# proportion compliers

Prop.Comp <- function(param){

first <- pnorm((log(param['barY'])+param['barkappa']-param['barmu'])/(sqrt((1+param['gamma']^2)*param['sigma2mu']+param['sigma2U']+param['sigma2omega'])))

second <- pnorm((log(param['barY'])-param['barmu'])/(sqrt((1+param['gamma']^2)*param['sigma2mu']+param['sigma2U']+param['sigma2omega'])))

return(param['pxi']*(first - second))

}

# proportion defiers

Prop.Def <- function(param){

first <- pnorm((log(param['barY'])-param['barmu'])/(sqrt((1+param['gamma']^2)*param['sigma2mu']+param['sigma2U']+param['sigma2omega'])))

second <- pnorm((log(param['barY'])-param['underbarkappa']-param['barmu'])/(sqrt((1+param['gamma']^2)*param['sigma2mu']+param['sigma2U']+param['sigma2omega'])))

return((1-param['pxi'])*(first - second))

}In our example, the proportion of compliers is equal to 0.33 and the proportion of defiers is equal to 0.01. As a consequence, the population value of the numerator of the Wald estimator is equal to 0.05. In the Wald estimator, this quantity is divided by the difference between the proportion of participants when \(Z_i=1\) and when \(Z_i=0\). We have already computed this quantity earlier, but it is nice to try to compute it in a different way using the types. The difference in the proportion of participants when \(Z_i=1\) and when \(Z_i=0\) is indeed equal to the difference in the proportion of compliers and the proportion of defiers. The difference between the proportion of compliers and the proportion of defiers is equal to 0.32, while the difference between the proportion of participants when \(Z_i=1\) and when \(Z_i=0\) is equal to 0.32. It is reassuring that we find the same thing (actually, full disclosure, I did not find the same thing at first, and this help me spot a mistake in the formulas for the proportions of participants: mistakes are normal and natural and that is how we learn and grow).

So we are now equipped to compute the value of the Wald estimator in the population in our model without monotonicity. It is equal to 0.172. In practice, the bias of the Wald estimator is rather small for the average treatment effect on the compliers (remember that it is equal to 0.171). In order to understand why, it is useful to see that the bias of the Wald estimator for the average treatment effect on the compliers is equal to:

\[\begin{align*} \esp{\Delta_i^Y|T_i=c}-\Delta^Y_{Wald} & = \esp{\Delta_i^Y|T_i=c} + (\esp{\Delta_i^Y|T_i=c}-\esp{\Delta_i^Y|T_i=d})\frac{\Pr(T_i=d)}{\Pr(T_i=c)-\Pr(T_i=d)}, \end{align*}\]

where the equality follows from Theorem 3.10 and some algebra. In the absence of Monotonicity, when the impact on defiers is smaller than the impact of compliers, the Wald estimator is baised upward for the effect on the compliers (as it happens in our example). In a model in which the effect of the treatment is larger on defiers than on compliers, the Wald estimator is biased downwards for the effect on compliers because defiers make the outcome of the control group seem too good. In the extreme, when \(\esp{\Delta_i^Y|T_i=d}>\esp{\Delta_i^Y|T_i=c}(1+\frac{\Pr(T_i=c)-\Pr(T_i=d)}{\Pr(T_i=d)})\), the Wald estimator can be negative whereas the effects on compliers and on defiers are both positive. This happens when the effect on defiers is \(1+\frac{\Pr(T_i=c)-\Pr(T_i=d)}{\Pr(T_i=d)}\) times larger than the effect on compliers. In our case, that means that the effect on defiers should be 26 times larger than the effect on compliers for the Wald estimator to be negative, that is to say the effect on defiers should be equal to 4.41, really much much much larger than the effect on compliers.

From there, we are going to explore three strategies in order to identify some true effect of the treatment using the Wald estimator:

- The first strategy has been recently proposed by de Chaisemartin (2017). It is valid in a model without monotonicity.

- The second strategy assumes that the heterogeneity in treatment effects is uncorrelated to the treatment.

- The last strategy is due to Imbens and Angrist (1994) and assumes that Monotonicity holds.

Let’s review these solutions in turn.

4.1.2.1 Identification without Monotonicity

The approach delineated by de Chaisemartin (2017) does not assume away non-monotonicity. Clement instead assumes that we can divide the population of compliers in two-subpopulations: the compliers-defiers (\(T_i=cd\)) and the surviving-compliers (\(T_i=sc\)). The main assumption in Clement’s approach is that (i) the compliers-defiers are in the same proportion as the defiers and (ii) that the average effect of the treatment on the compliers defiers is equal as the average effect of the treatment on the defiers. These two assumptions can be formalized as follows:

Hypothesis 4.5 (Compliers-defiers) We assume that there exists as subpopulation of compliers that are in the same proportion as the defiers and for whom the average effect of the treatment is equal as the average effect of the treatment on the defiers:

\[\begin{align*} (T_i=c) & = (T_i=cd)\cup (T_i=sc) \\ \Pr(T_i=cd) & = \Pr(T_i=d) \\ \esp{Y^1_i-Y^0_i|T_i=cd} & = \esp{Y^1_i-Y^0_i|T_i=d}. \end{align*}\]

The first equation in Assumption 4.5 imposes that the compliers-defiers and the surviving-compliers are a partition of the population of compliers. From Assumption 4.5, we can prove the following theorem:

Theorem 4.1 (Identification of the effect on the surviving-compliers) Under Assumptions 4.1, 4.2, 4.3 and 4.5, the Wald estimator identifies the effect of the treatment on the surviving-compliers:

\[\begin{align*} \Delta^Y_{Wald} & = \Delta^Y_{sc}, \end{align*}\]

with:

\[\begin{align*} \Delta^Y_{Wald} & = \frac{\esp{Y_i|Z_i=1} - \esp{Y_i|Z_i=0}}{\Pr(D_i=1|Z_i=1)-\Pr(D_i=1|Z_i=0)}\\ \Delta^Y_{sc} & = \esp{Y^1_i-Y^0_i|T_i=sc}. \end{align*}\]

Proof. Under Assumptions 4.2 and 4.3, Theorems 3.10 and 3.12 imply that the numerator of the Wald estimator is equal to \(\Delta^Y_{ITE}\) with:

\[\begin{align*} \Delta^Y_{ITE} & = \esp{Y_i^{1}-Y_i^{0}|T_i=c}\Pr(T_i=c)-\esp{Y_i^{1}-Y_i^{0}|T_i=d}\Pr(T_i=d). \end{align*}\]

Now, we have that the effect on compliers can be decomposed in the effect on surviving-compliers and the effect on compliers-defiers using the Law of Iterated Expectations and the fact that \(T_i=sc \Rightarrow T_i=c\) and \(T_i=cd \Rightarrow T_i=c\):

\[\begin{align*} \Delta^Y_{c} & = \esp{Y_i^{1}-Y_i^{0}|T_i=sc}\Pr(T_i=sc|T_i=c)+\esp{Y_i^{1}-Y_i^{0}|T_i=cd}\Pr(T_i=cd|T_i=c), \end{align*}\]

Now, using the fact that \(\Pr(T_i=sc|T_i=c)\Pr(T_i=c)=\Pr(T_i=sc)\) and \(\Pr(T_i=cd|T_i=c)\Pr(T_i=c)=\Pr(T_i=cd)\) (because \(\Pr(A|B)\Pr(B)=\Pr(A\cap B)\) and \(\Pr(A\cap B)=\Pr(A)\) if \(A \Rightarrow B\)), we have:

\[\begin{align*} \Delta^Y_{ITE} & = \esp{Y_i^{1}-Y_i^{0}|T_i=sc}\Pr(T_i=sc)\\ & \phantom{=}+\esp{Y_i^{1}-Y_i^{0}|T_i=cd}\Pr(T_i=cd)-\esp{Y_i^{1}-Y_i^{0}|T_i=d}\Pr(T_i=d). \end{align*}\]

The second part of the right-hand side of the above equation is equal to zero by virtue of Assumption 4.5. Now, under Assumptions 4.1, 4.2 and 4.3, we know, from the proof of Theorem 3.9, that \(\Pr(D_i=1|Z_i=1)-\Pr(D_i=1|Z_i=0)=\Pr(T_i=c)-\Pr(T_i=d)\). Under Assumption 4.5, we have \(\Pr(T_i=c)=\Pr((T_i=cd)\cup(T_i=sc))=\Pr(T_i=cd)+\Pr(T_i=sc)\). Replacing \(\Pr(T_i=c)\) gives \(\Pr(D_i=1|Z_i=1)-\Pr(D_i=1|Z_i=0)=\Pr(T_i=sc)\). Dividing \(\Delta^Y_{ITE}\) by \(\Pr(T_i=sc)\) gives the result.

Remark. de Chaisemartin (2017) shows in his Theorem 2.1 that the reciprocal of Theorem 4.1 is actually valid: if there exists surviving-compliers such that their effect is estimated by the Wald estimator and their proportion is equal to the denominator of the Wald estimator, then it has to be that there exists a sub-population of compliers-defiers that are in the same proportion as the defiers and have the same average treatment effect.

Example 4.7 Let us now see if the conditions in de Chaisemartin (2017) are verified in our numerical example.

I have bad news: they are not. It is not super easy to see why, but an intuitive explanation is that the average effect on the defiers in our model is taken conditional on \(y^B_i+V_i\in]\bar{y}-\underline{\kappa},\bar{y}]\) while the effect on compliers is taken conditional on \(y^B_i+V_i\in]\bar{y},\bar{y}+\bar{\kappa}]\). These two intervals do not overlap. Since the expected value of the treatment effect conditional on \(y^B_i+V_i=v\) is monotonous in \(v\) (because both variables come from a bivariate normal distribution), then all the effects on the defiers interval are either smaller or larger than all the effects on the compliers interval, making it impossible to find a sub-population of compliers that have the same average effect of the treatment as the defiers.

More formally, it is possible to prove this result by using the concept of Marginal Treatment Effect developed by Heckman and Vytlacil (1999). I might devote a specific section of the book to the MTE and its derivations. For now, I let it as a possibility.

What can we do then? Probably the best that we can do is to find \(\kappa^*\) such that \(\Pr(\bar{y}<y_i^B+V\leq\bar{y}+\kappa^*)p_{\xi}=\Pr(T_i=d)\), that is the value such that the interval of values of \(y_i^B+V\) that are for compliers and closest to the interval for defiers and that contains the same proportion of compliers as there are defiers. This value is going to produce an average effect for compliers-defiers as close as possible to the average effect on defiers. It can be computed as follows:

\[\begin{align*} \kappa^* & = \bar{\mu}-\bar{y}+\sqrt{(1+\gamma^2)\sigma^2_{\mu}+\sigma^2_U+\sigma^2_{\omega}} \Phi^{-1}\Bigg(\Phi\left(\frac{\bar{y}-\bar{\mu}}{\sqrt{(1+\gamma^2)\sigma^2_{\mu}+\sigma^2_U+\sigma^2_{\omega}}}\right)\\ & \phantom{=}+\frac{1-p_{\xi}}{p_{\xi}}\left(\Phi\left(\frac{\bar{y}-\bar{\mu}}{\sqrt{(1+\gamma^2)\sigma^2_{\mu}+\sigma^2_U+\sigma^2_{\omega}}}\right)-\Phi\left(\frac{\bar{y}-\underline{\kappa}-\bar{\mu}}{\sqrt{(1+\gamma^2)\sigma^2_{\mu}+\sigma^2_U+\sigma^2_{\omega}}}\right)\right)\Bigg) \end{align*}\]

Let’s write functions to compute \(\kappa^*\), the implied proportion of compliers-defiers and the average effect of the treatment on compliers-defiers and on surviving-compliers:

# kappa star

KappaStar <- function(param){

prop.def <- Prop.Def(param)

prop.below.bary <- pnorm((log(param['barY'])-param['barmu'])/(sqrt((1+param['gamma']^2)*param['sigma2mu']+param['sigma2U']+param['sigma2omega'])))

st.dev.yB.V <- sqrt((1+param['gamma']^2)*param['sigma2mu']+param['sigma2U']+param['sigma2omega'])

return(param['barmu']-log(param['barY'])+st.dev.yB.V*qnorm(prop.below.bary+prop.def/param['pxi']))

}

# proportion of compliers-defiers

Prop.Comp.Def <- function(param){

first <- pnorm((log(param['barY'])+KappaStar(param)-param['barmu'])/(sqrt((1+param['gamma']^2)*param['sigma2mu']+param['sigma2U']+param['sigma2omega'])))

second <- pnorm((log(param['barY'])-param['barmu'])/(sqrt((1+param['gamma']^2)*param['sigma2mu']+param['sigma2U']+param['sigma2omega'])))

return(param['pxi']*(first - second))

}

# mean impact on compliers-defiers

lower.cut.comp.def <- c(-Inf,log(param['barY']))

upper.cut.comp.def <- c(Inf,log(param['barY'])+KappaStar(param))

mean.alpha.comp.def.pop <- mtmvnorm(mean=mean.alpha.yBV,sigma=cov.alpha.yBV,lower=lower.cut.comp.def,upper=upper.cut.comp.def,doComputeVariance=FALSE)[[1]]

# mean impact on surviving compliers

lower.cut.surv.comp <- c(-Inf,log(param['barY'])+KappaStar(param))

upper.cut.surv.comp <- c(Inf,log(param['barY'])+param['barkappa'])

mean.alpha.surv.comp.pop <- mtmvnorm(mean=mean.alpha.yBV,sigma=cov.alpha.yBV,lower=lower.cut.surv.comp,upper=upper.cut.surv.comp,doComputeVariance=FALSE)[[1]]The first have that \(\kappa^*=\) 0.0452. For this value of \(\kappa^*\), we have that \(\Pr(T_i=cd)=\) 0.0128. As expected, this is very close to the proportion of compliers in the population: \(\Pr(T_i=d)=\) 0.0128. Finally, the average treatment effect on the compliers-defiers is equal to: \(\Delta^y_{cd}=\) 0.1457. As expected, but luckily enough, since it was absolutely not sure, it is very close to the to the average treatment effect on the defiers: \(\Delta^y_{d}=\) 0.1445. So, in our model, Assumption 4.5 is almost satisfied, and so does Theorem 4.1. As a consequence, the Wald estimator is very close to the effect on the surviving-compliers. Indeed, the Wald estimator, in the population, is equal to \(\Delta^y_{Wald}=\) 0.172156, while the average effect on surviving-compliers is equal to \(\Delta^y_{sc}=\) 0.172108.

4.1.2.2 Identification under Independence of treatment effects

Another way to get around the issue of Non-Monotonicity is simply to assume away any meaningful role for treatment effect heterogeneity. One approach to that would simply be to assume that treatment effects are constant across individuals. I leave to the reader to prove that in that case, the Wald estimator would recover the treatment effect under only Independence and Exclusion Restriction. We are going to use a slightly more general approach here by assuming that treatment effect heterogeneity is unrelated to the reaction to the instrument:

Hypothesis 4.6 (Independent Treatment Effects) We assume that the treatment effect is independent from potential reactions to the instrument:

\[\begin{align*} \Delta^Y_i\Ind (D^1_i,D^0_i). \end{align*}\]

We can now prove that, under Assumption 4.6, the Wald estimator identifies the Average Treatment Effect (ATE), the average effect of the Treatment on the Treated (TT) and the average effect on compliers and on defiers. The first thing to know before we state the result is that, under Assumption 4.6, all these average treatment effects are equal to each other. This is a direct implication of the following lemma:

Lemma 4.1 (Independence of Treatment Effects from Types) Under Assumption 4.6, the treatment effect is independent from types:

\[\begin{align*} \Delta^Y_i\Ind T_i. \end{align*}\]

Proof. Lemma (4.2) in Dawid (1979) states that if \(X \Ind Y|Z\) and \(U\) is a function of \(X\), then \(U \Ind Y|Z\). Since \(T_i\) is a function of \((D^1_i,D^0_i)\) under Assumption 4.6, Lemma 4.1 follows.

A direct corollary of Lemma 4.1 is:

Corollary 4.1 (Independence of Treatment Effects and Average Effects) Under Assumption 4.6, the Average Treatment Effect (ATE), the average effect of the Treatment on the Treated (TT) and the average effect on compliers and on defiers are all equal:

\[\begin{align*} \Delta^Y_{ATE} = \Delta^Y_{TT(1)} = \Delta^Y_{TT(0)} = \Delta^Y_{c} = \Delta^Y_{d}. \end{align*}\]

with: \[\begin{align*} \Delta^Y_{TT(z)} = \esp{Y_i^1-Y_i^0|D_i=1,Z_i=z}. \end{align*}\]

Proof. Using Lemma 4.1, we have that:

\[\begin{align*} \Delta^Y_{c} = \Delta^Y_{d} = \Delta^Y_{at} =\Delta^Y_{nt}. \end{align*}\]

Because \(T_i\) is a partition, we have \(\Delta^Y_{ATE}=\Delta^Y_{c}\Pr(T_i=c)+\Delta^Y_{d}\Pr(T_i=d)+\Delta^Y_{at}\Pr(T_i=at)+\Delta^Y_{nt}\Pr(T_i=nt)=\Delta^Y_{c}\) (since \(\Pr(T_i=c)+\Pr(T_i=d)+\Pr(T_i=at)+\Pr(T_i=nt)=1\)). Finally, we also have that \(\Delta^Y_{TT(1)}=\Delta^Y_{c}\Pr(T_i=c|D_i=1,Z_i=1)+\Delta^Y_{at}\Pr(T_i=at|D_i=1,Z_i=1)=\Delta^Y_{c}\) and \(\Delta^Y_{TT(0)}=\Delta^Y_{d}\Pr(T_i=d|D_i=1,Z_i=0)+\Delta^Y_{at}\Pr(T_i=at|D_i=1,Z_i=0)=\Delta^Y_{c}\), since \((D_i=1)\cap(Z_i=1)\Rightarrow (T_i=c)\cup(T_i=at)\) and \((D_i=1)\cap(Z_i=0)\Rightarrow (T_i=d)\cup(T_i=at)\).

We are now equipped to state the final result of this section:

Theorem 4.2 (Identification under Independent Treatment Effect) Under Assumptions 4.1, 4.2, 4.3 and 4.6, the Wald estimator identifies the average effect of the Treatment on the Treated:

\[\begin{align*} \Delta^Y_{Wald} & = \Delta^Y_{TT}. \end{align*}\]

Proof. Using the formula for the Wald estimator, we have, for the two components of its numerator:

\[\begin{align*} \esp{Y_i|Z_i=1} & = \esp{Y_i^0+(Y_i^1-Y_i^0)D_i|Z_i=1} \\ & = \esp{Y_i^0|Z_i=1}+\esp{\Delta^Y_i|D_i=1,Z_i=1}\Pr(D_i=1|Z_i=1)\\ & = \esp{Y_i^0|Z_i=1}+\Delta^Y_{TT(1)}\Pr(D_i=1|Z_i=1)\\ \esp{Y_i|Z_i=0} & = \esp{Y_i^0+(Y_i^1-Y_i^0)D_i|Z_i=0} \\ & = \esp{Y_i^0|Z_i=0}+\esp{\Delta^Y_i|D_i=0,Z_i=1}\Pr(D_i=1|Z_i=0)\\ & = \esp{Y_i^0|Z_i=0}+\Delta^Y_{TT(0)}\Pr(D_i=1|Z_i=0),\\ \end{align*}\]

where the first equalities use Assumption 4.2. Now, under Assumption 4.6, Corollary 4.1 implies that \(\Delta^Y_{TT(0)}=\Delta^Y_{TT(1)}=\Delta^Y_{TT}\). We thus have that the numerator of the Wald estimator is equal to:

\[\begin{align*} \esp{Y_i|Z_i=1}-\esp{Y_i|Z_i=0} & = \Delta^Y_{TT}(\Pr(D_i=1|Z_i=1)-\Pr(D_i=1|Z_i=0))\\ & \phantom{=}+\esp{Y_i^0|Z_i=1}-\esp{Y_i^0|Z_i=0}. \end{align*}\]

Assumption 4.3 implies that \(\esp{Y_i^0|Z_i=1}=\esp{Y_i^0|Z_i=0}\). Using Assumption 4.1 proves the result.

4.1.2.3 Identification under Monotonicity

The classical approach to identification using instrumental variables is due to Imbens and Angrist (1994) and Angrist, Imbens and Rubin (1996). It rests on Assumption 4.4 or Monotonicity that we are now familiar with, that requires that the effect of the instrument on treatment participation moves everyone in the same direction.

Remark. For the rest of the section, we will assume that \(\forall i\), \(D^1_i\geq D_i^0\). It is without loss of generality, since if the initial treatment does not comply with this requirement, you can simply redefine a new treatment equal to \(-D_i\).

Under Monotonicity, there are no defiers. This is what the following lemma shows:

Lemma 4.2 Under Assumption 4.4, there are no defiers a.s.:

\[\begin{align*} \Pr(T_i=c) & = 0. \end{align*}\]

Proof. Under Assumption 4.4, \(\forall i\), \(D^1_i\geq D_i^0\). As a consequence, \(\Pr(D^1_i < D_i^0)=0\). Since defiers are defined as \(D^1_i < D_i^0\), the result follows.

In the absence of defiers, the Wald estimator identifies the average effect of the treatment on the compliers, also called the Local Average Treatment Effect:

Theorem 4.3 Under Assumptions 4.1, 4.2, 4.3 and 4.4, the Wald estimator identifies the average effect of the treatment on the compliers, also called the Local Average Treatment Effect:

\[\begin{align*} \Delta^Y_{Wald}& = \Delta^Y_{LATE}. \end{align*}\]

Proof. Using Theorem 3.9 directly proves the result.

Remark. The magic of the instrumental variables setting applies again. By moving the instrument, we are able to learn something about the causal effect of the treatment. Monotonicity is a very strong assumption though, as are Independence and Exclusion Restriction. They are very rarely met in practice. Even the case of RCTs with encouragement design, where Independence holds by design, might be affected by failures of Exclusion Restriction and/or Monotonicity.

Example 4.8 Let’s see how monotonicity works in our example.

First, we have to generate a model in which monotonicity holds. For that, we need to shut down heterogeneous reactions to the instrument. In practice, we are going to replace the participation equation in our model, which was characterized by a random coefficient, by the following one, which has a constant coefficient:

\[\begin{align*} D_i & = \uns{y_i^B-\bar{\kappa} Z_i + V_i\leq\bar{y}} \end{align*}\]

As a consequence, we have no more defiers and monotonicity holds. Let us now generate the data from the model with monotonicity:

set.seed(12345)

N <-1000

cov.eta.omega <- matrix(c(param["sigma2eta"],param["rhoetaomega"]*sqrt(param["sigma2eta"]*param["sigma2omega"]),param["rhoetaomega"]*sqrt(param["sigma2eta"]*param["sigma2omega"]),param["sigma2omega"]),ncol=2,nrow=2)

eta.omega <- as.data.frame(mvrnorm(N,c(0,0),cov.eta.omega))

colnames(eta.omega) <- c('eta','omega')

mu <- rnorm(N,param["barmu"],sqrt(param["sigma2mu"]))

UB <- rnorm(N,0,sqrt(param["sigma2U"]))

yB <- mu + UB

YB <- exp(yB)

Ds <- rep(0,N)

Z <- rbinom(N,1,param["pZ"])

V <- param["gamma"]*(mu-param["barmu"])+eta.omega$omega

Ds[yB-param["barkappa"]*Z+V<=log(param["barY"])] <- 1

epsilon <- rnorm(N,0,sqrt(param["sigma2epsilon"]))

U0 <- param["rho"]*UB + epsilon

y0 <- mu + U0 + param["delta"]

alpha <- param["baralpha"]+ param["theta"]*mu + eta.omega$eta

y1 <- y0+alpha

Y0 <- exp(y0)

Y1 <- exp(y1)

y <- y1*Ds+y0*(1-Ds)

Y <- Y1*Ds+Y0*(1-Ds)We can now define the types variable \(T_i\):

D1 <- ifelse(yB-param["barkappa"]+V<=log(param["barY"]),1,0)

D0 <- ifelse(yB+V<=log(param["barY"]),1,0)

AT <- ifelse(D1==1 & D0==1,1,0)

NT <- ifelse(D1==0 & D0==0,1,0)

C <- ifelse(D1==1 & D0==0,1,0)

D <- ifelse(D1==0 & D0==1,1,0)

Type <- ifelse(AT==1,'a',

ifelse(NT==1,'n',

ifelse(C==1,'c',

ifelse(D==1,'d',""))))

data.mono <- data.frame(cbind(Type,C,NT,AT,D1,D0,Y,y,Y1,Y0,y0,y1,yB,alpha,U0,eta.omega$eta,epsilon,Ds,Z,mu,UB))The first thing we can check is that there are no defiers. For that, let’s count the number of individuals who have \(T_i=1\). It is equal to 0.

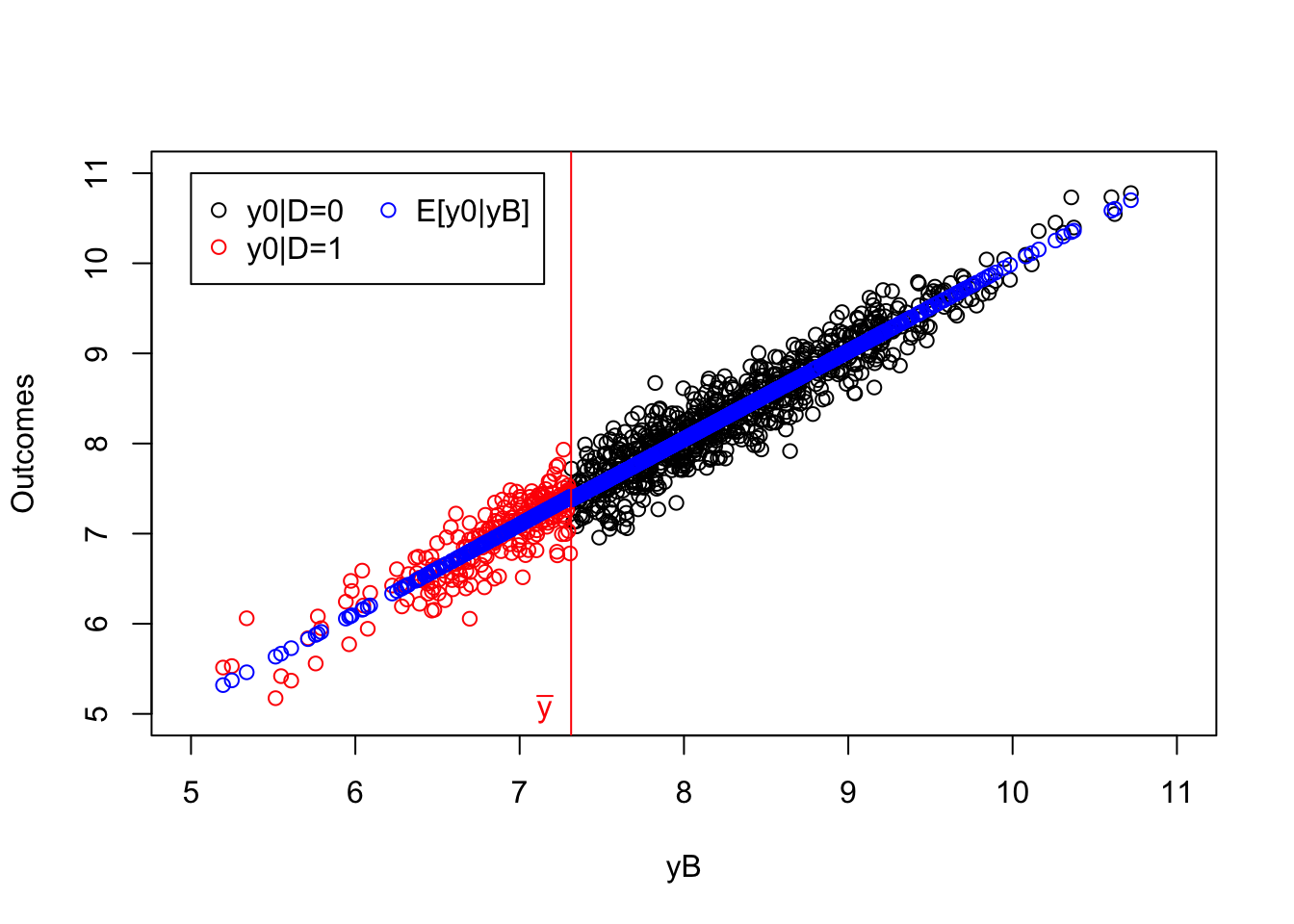

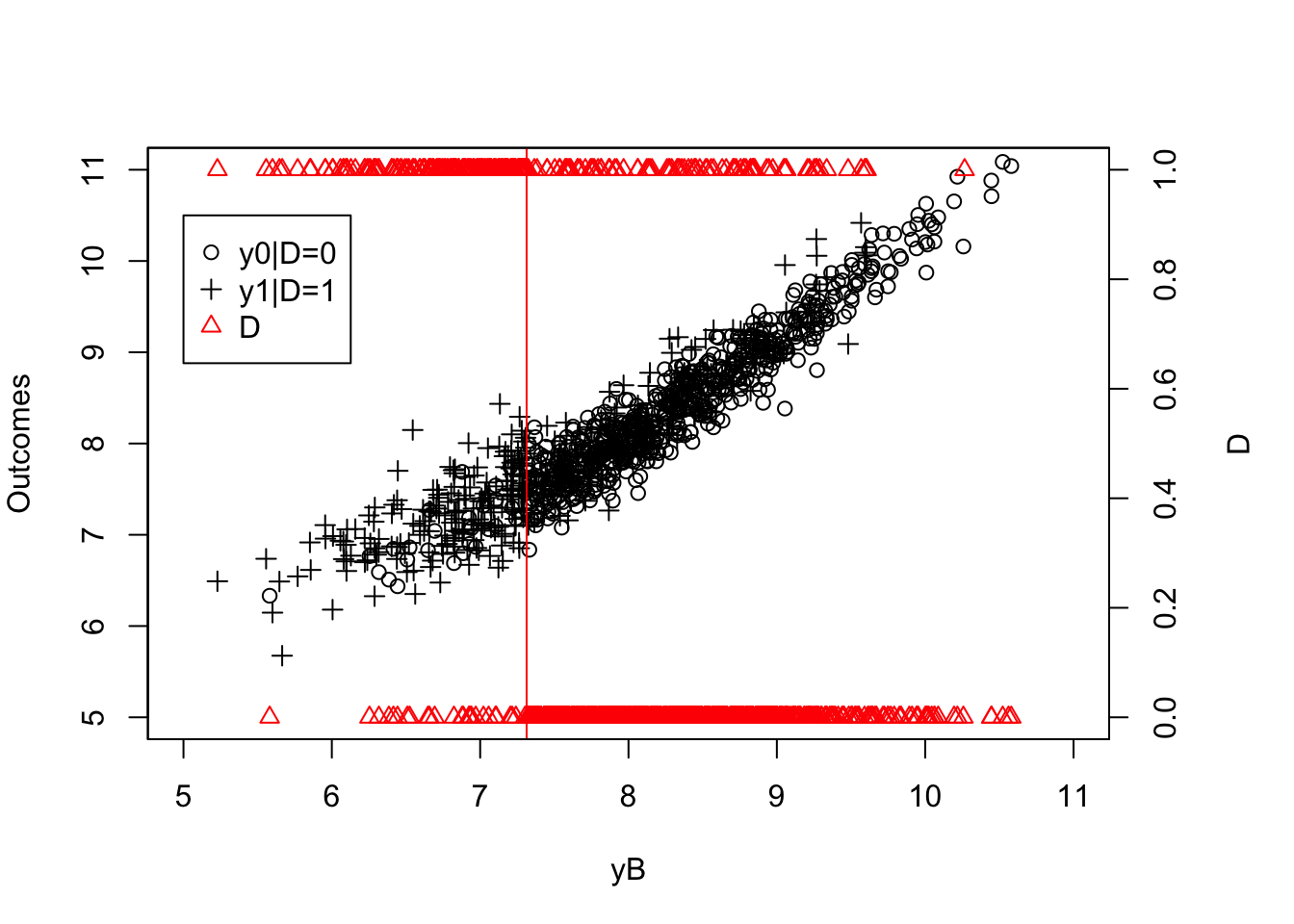

One thing that helped me understand how the IV approach under monotonicity works is the following graph:

plot(yB[AT==1]+V[AT==1],y[AT==1],pch=1,xlim=c(5,11),ylim=c(5,11),xlab="yB+V",ylab="Outcomes")

points(yB[NT==1]+V[NT==1],y[NT==1],pch=1,col='blue')

points(yB[C==1 & Ds==1]+V[C==1 & Ds==1],y[C==1 & Ds==1],pch=1,col='red')

points(yB[C==1 & Ds==0]+V[C==1 & Ds==0],y[C==1 & Ds==0],pch=1,col='green')

abline(v=log(param["barY"]),col="red")

abline(v=log(param["barY"])+param['barkappa'],col="red")

text(x=c(log(param["barY"]),log(param["barY"])+param['barkappa']),y=c(5,5),labels=c(expression(bar('y')),expression(bar('y')+bar(kappa))),pos=c(2,4),col=c("red","red"))

legend(5,10.5,c('AT','NT','C|D=1','C|D=0'),pch=c(1,1,1,1),col=c("black",'blue',"red",'green'),ncol=1)

Figure 4.4: Types under Monotonicity

What 4.4 shows is that the IV acts as a randomized controlled trial among compliers. Within the population of compliers, whether one receives the treatment or not is as good as random. If we actually knew who the compliers were, we could directly estimate the effect of the treatment by comparing the outcomes of the treated compliers to the outcomes of the untreated compliers. Actually, this approach, applied in our sample, yields an estimated treatment effect on the compliers of 0.14, whereas the simple comparison of participants and non participants would give an estimate of -0.93. In our sample, the average effect of the treatment on compliers is actually equal to 0.18.

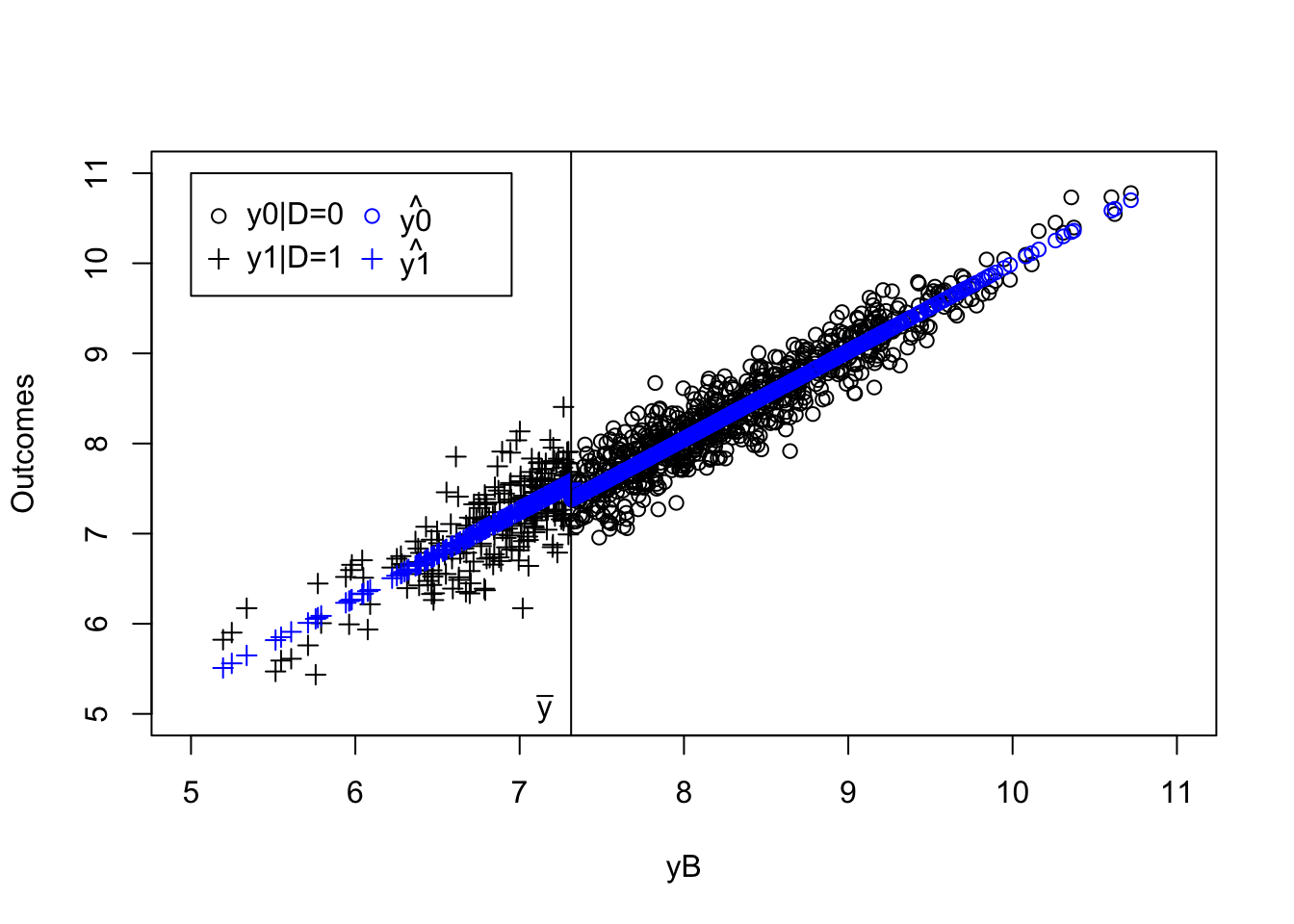

Let us finally check that Theorem 4.3 works in the population in our new model.

We need to compute the various parts of the Wald estimator and the average effect of the treatment on the compliers.

The key to understand the Wald estimator is to see that its numerator is composed of the difference between two means, with both means containing the average outcomes of always takers and never takers weighted by their respective proportions in the population, as shown in the proof of Theorem 4.3.

These two means cancel out, leaving only the differences in the means of the compliers in and out of the treatment, weighted by their proportion in the population.

The denominator of the Wald estimator simply provides an estimate of the proportion of compliers.

In order to illustrate these intuitions in our example, I am going to use the formula for a truncated multivariate normal variable and the package tmvtnorm.

The most important thing to notice here is that \((y^0_i,y^1_i,y_i^B+V_i) \sim \mathcal{N}\left(\bar{\mu}+\delta,\bar{\mu}(1+\theta)+\delta+\bar{\alpha},\bar{\mu},\mathbf{C}\right)\) with:

\[\begin{align*} \mathbf{C} &= \left(\begin{array}{ccc} \sigma^2_{\mu}+\rho^2\sigma^2_{U} +\sigma^2_{\epsilon} & (1+\theta)\sigma^2_{\mu}+\rho^2\sigma^2_U + \sigma^2_{\epsilon} & (1+\gamma)\sigma^2_{\mu}+\rho\sigma^2_U \\ (1+\theta)\sigma^2_{\mu}+\rho^2\sigma^2_U + \sigma^2_{\epsilon} & (1+\theta^2)\sigma^2_{\mu}+\rho^2\sigma^2_{U} +\sigma^2_{\epsilon} + \sigma^2_{\eta} & (1+\theta+\gamma)\sigma^2_{\mu}+\rho\sigma^2_U+\rho_{\eta,\omega}\sigma^2_{\eta}\sigma^2_{\omega} \\ (1+\gamma)\sigma^2_{\mu}+\rho\sigma^2_U & (1+\theta+\gamma)\sigma^2_{\mu}+\rho\sigma^2_U+\rho_{\eta,\omega}\sigma^2_{\eta}\sigma^2_{\omega} & (1+\gamma^2)\sigma^2_{\mu}+\sigma^2_U+\sigma^2_{\omega} \\ \end{array} \right) \end{align*}\]

We now simply have to derive the mean outcomes and proportions of each type in the population in order to form the Wald estimator. Let me first derive the joint distribution of the portential outcomes and the means and proportions of each type in the population.

mean.y0.y1.yBV <- c(param['barmu']+param['delta'],param['barmu']*(1+param['theta'])+param['delta']+param['baralpha'],param['barmu'])

cov.y0.y1.yBV <- matrix(c(param['sigma2mu']+param['rho']^2*param['sigma2U']+param['sigma2epsilon'],

(1+param['theta'])*param['sigma2mu']+param['rho']^2*param['sigma2U']+param['sigma2epsilon'],

(1+param['gamma'])*param['sigma2mu']+param['rho']*param['sigma2U'],

(1+param['theta'])*param['sigma2mu']+param['rho']^2*param['sigma2U']+param['sigma2epsilon'],

(1+param['theta']^2)*param['sigma2mu']+param['rho']^2*param['sigma2U']+param['sigma2epsilon']+param['sigma2eta'],

(1+param['theta']+param['gamma'])*param['sigma2mu']+param['rho']*param['sigma2U']+param['rhoetaomega']*param['sigma2eta']*param['sigma2omega'],

(1+param['gamma'])*param['sigma2mu']+param['rho']*param['sigma2U'],

(1+param['theta']+param['gamma'])*param['sigma2mu']+param['rho']*param['sigma2U']+param['rhoetaomega']*param['sigma2eta']*param['sigma2omega'],

(1+param['gamma']^2)*param['sigma2mu']+param['sigma2U']+param['sigma2omega']),3,3,byrow=TRUE)

# cuts

#always takers

lower.cut.at <- c(-Inf,-Inf,-Inf)

upper.cut.at <- c(Inf,Inf,log(param['barY']))

# compliers

lower.cut.comp <- c(-Inf,-Inf,log(param['barY']))

upper.cut.comp <- c(Inf,Inf,log(param['barY'])+param['barkappa'])

# never takers

lower.cut.nt <- c(-Inf,-Inf,log(param['barY'])+param['barkappa'])

upper.cut.nt <- c(Inf,Inf,Inf)

# means by types

#always takers

mean.y1.at.pop <- mtmvnorm(mean=mean.y0.y1.yBV,sigma=cov.y0.y1.yBV,lower=lower.cut.at,upper=upper.cut.at,doComputeVariance=FALSE)[[1]][[2]]

mean.y0.at.pop <- mtmvnorm(mean=mean.y0.y1.yBV,sigma=cov.y0.y1.yBV,lower=lower.cut.at,upper=upper.cut.at,doComputeVariance=FALSE)[[1]][[1]]

# never takers

mean.y1.nt.pop <- mtmvnorm(mean=mean.y0.y1.yBV,sigma=cov.y0.y1.yBV,lower=lower.cut.nt,upper=upper.cut.nt,doComputeVariance=FALSE)[[1]][[2]]

mean.y0.nt.pop <- mtmvnorm(mean=mean.y0.y1.yBV,sigma=cov.y0.y1.yBV,lower=lower.cut.nt,upper=upper.cut.nt,doComputeVariance=FALSE)[[1]][[1]]

#compliers

mean.y1.comp.pop <- mtmvnorm(mean=mean.y0.y1.yBV,sigma=cov.y0.y1.yBV,lower=lower.cut.comp,upper=upper.cut.comp,doComputeVariance=FALSE)[[1]][[2]]

mean.y0.comp.pop <- mtmvnorm(mean=mean.y0.y1.yBV,sigma=cov.y0.y1.yBV,lower=lower.cut.comp,upper=upper.cut.comp,doComputeVariance=FALSE)[[1]][[1]]

# Proportion of each types

# always takers

prop.at.pop <- ptmvnorm.marginal(log(param['barY']),n=3,mean=mean.y0.y1.yBV,sigma=cov.y0.y1.yBV)[[1]]

# never takers

prop.nt.pop <- 1-ptmvnorm.marginal(log(param['barY'])+param['barkappa'],n=3,mean=mean.y0.y1.yBV,sigma=cov.y0.y1.yBV)[[1]]

# compliers

prop.comp.pop <- ptmvnorm.marginal(log(param['barY'])+param['barkappa'],n=3,mean=mean.y0.y1.yBV,sigma=cov.y0.y1.yBV)[[1]]-ptmvnorm.marginal(log(param['barY']),n=3,mean=mean.y0.y1.yBV,sigma=cov.y0.y1.yBV)[[1]]

# LATE

late.pop <- mean.y1.comp.pop-mean.y0.comp.pop

late.prop.comp.pop <- late.pop*prop.comp.pop

# Wald

num.Wald.pop <- (mean.y1.comp.pop*prop.comp.pop+mean.y1.at.pop*prop.at.pop+mean.y0.nt.pop*prop.nt.pop-(mean.y0.comp.pop*prop.comp.pop+mean.y1.at.pop*prop.at.pop+mean.y0.nt.pop*prop.nt.pop))

denom.Wald.pop <- (prop.at.pop+prop.comp.pop-prop.at.pop)

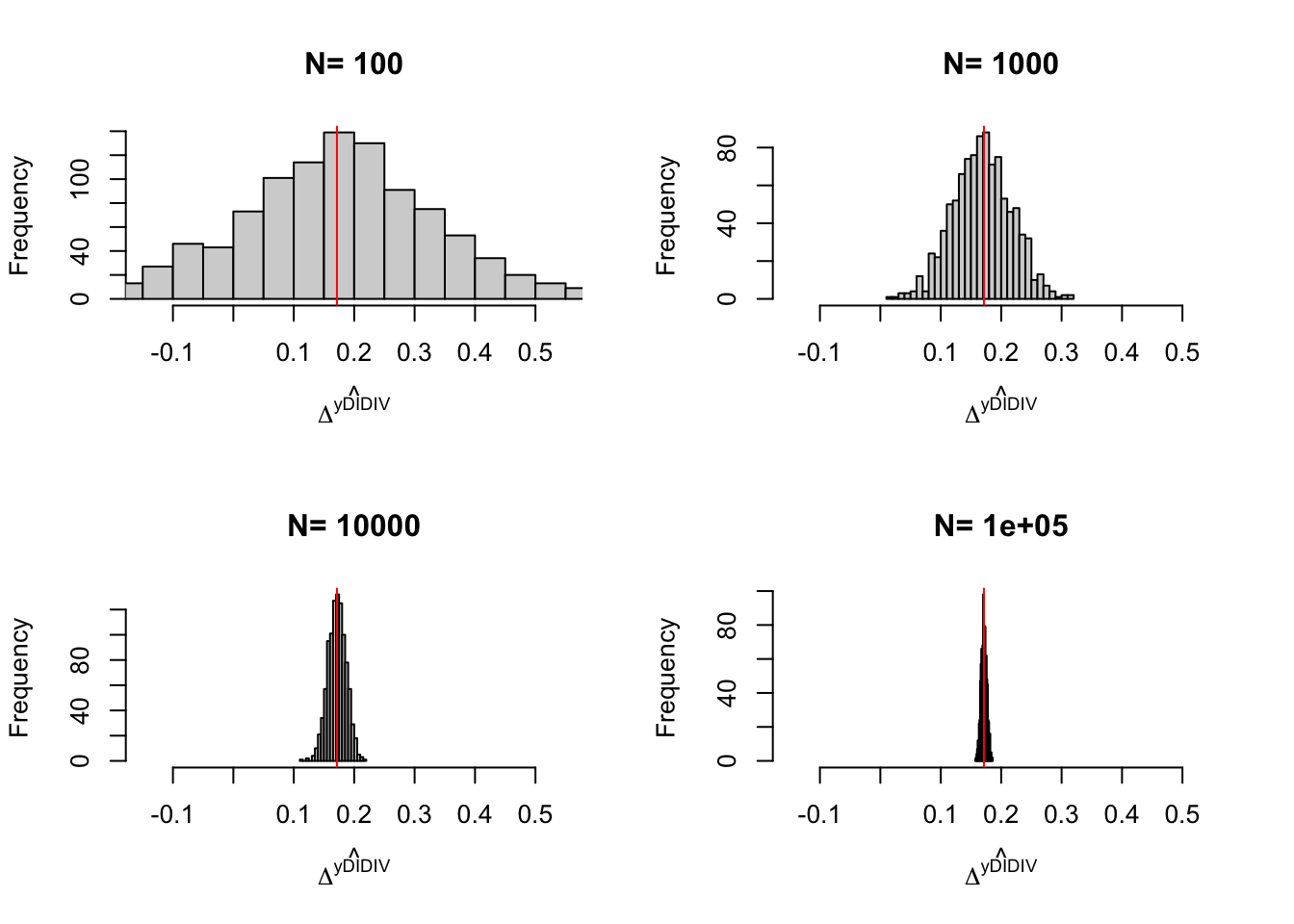

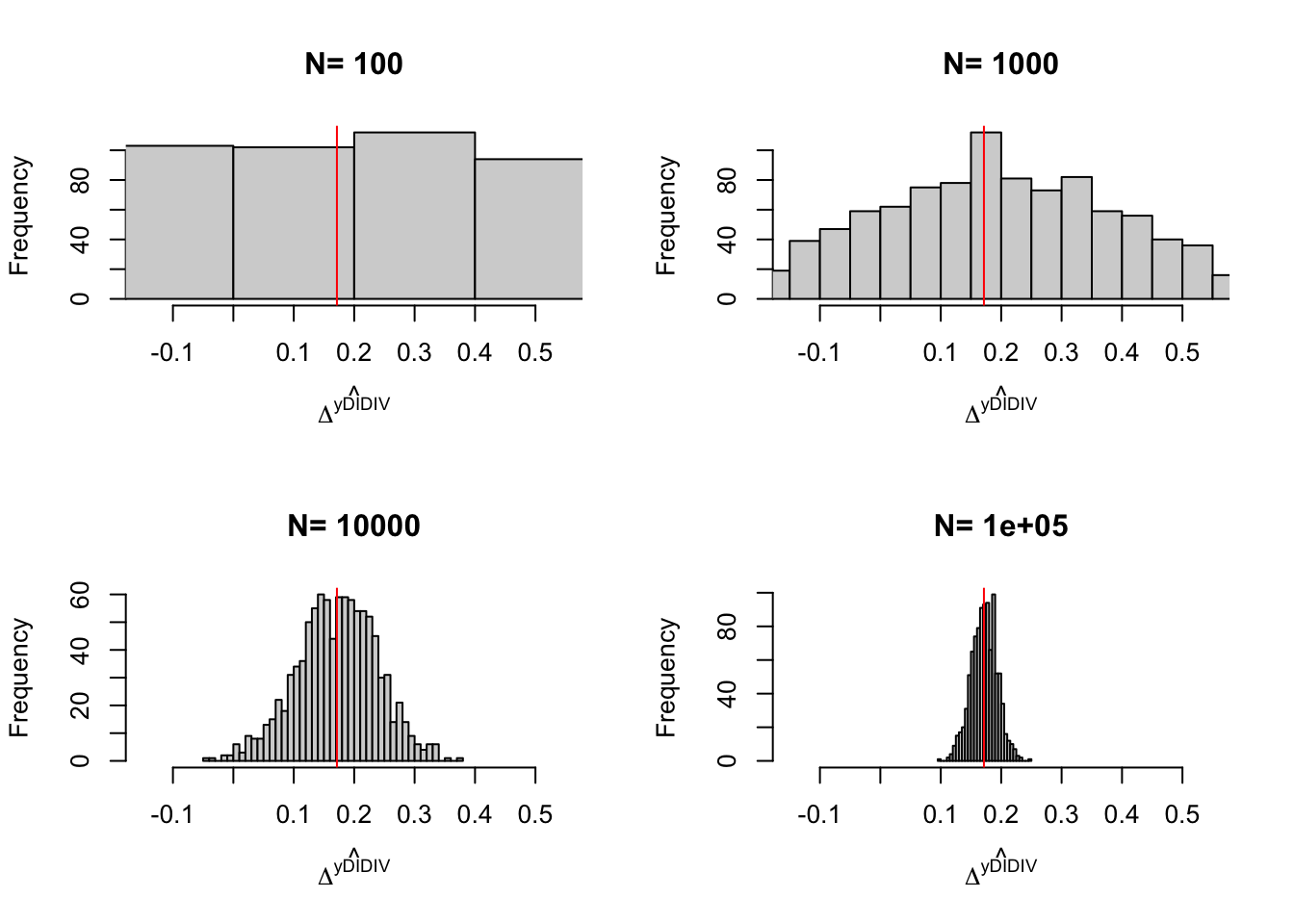

Wald.pop <- num.Wald.pop/denom.Wald.popWe are now equipped to compute the Wald estimator in the population. Before that, let us compute the LATE. We have \(\Delta^Y_{LATE} =\) 0.179. The Wald estimator is equal to \(\Delta^Y_{Wald} =\) 0.179. They are obviously equal. This is because the numerator of the Wald is equal to the product of the LATE multiplied by the proportion of compliers (which is equal to 0.066). This is because the outcomes of never takers and always takers cancel out on each separate term of the numerator of the Wald estimator. Indeed, we have that the numerator of the Wald estimator is equal to: 0.066.

4.1.3 Estimation

Estimation of the LATE under the IV assumptions closely follows the same steps that we have delineated in Section 3.4.2:

- First stage regression of \(D_i\) on \(Z_i\): this estimates the impact of the instrument on participation into the program and estimates the proportion of compliers.

- Reduced form regression of \(Y_i\) on \(Z_i\): this estimates the impact of the instrument on outcomes, a.k.a the ITE.

- Structural regression of \(Y_i\) on \(D_i\) using \(Z_i\) as an instrument, which estimates the LATE.

Let’s take these three steps in turn.

4.1.3.1 First stage regression

The first stage regression regresses \(D_i\) on \(Z_i\) and thus estimates the impact of the instrument on treatment participation, which is equal to the proportion of compliers. It can be run using the With/Without estimator or OLS (both are numerically equivalent as Lemma A.3 shows) or OLS conditioning on observed covariates.

Example 4.9 Let’s see how these three approaches fare in our example.

# WW first stage

WW.First.Stage.IV <- mean(Ds[Z==1])-mean(Ds[Z==0])

# Simple OLS

OLS.D.Z.IV <- lm(Ds~Z)

OLS.First.Stage.IV <- coef(OLS.D.Z.IV)[[2]]

# OLS conditioning on yB

OLS.D.Z.yB.IV <- lm(Ds~Z+yB)

OLSX.First.Stage.IV <- coef(OLS.D.Z.yB.IV)[[2]]The WW estimator of the first stage impact of \(Z_i\) on \(D_i\) is equal to 0.374. The OLS estimator of the first stage impact of \(Z_i\) on \(D_i\) is equal to 0.374. The OLS estimator of the first stage impact of \(Z_i\) on \(D_i\) conditioning on \(y^B_i\) is equal to 0.339. Remember that the true proportion of compliers in the population in our model is equal to 0.366.

4.1.3.2 Reduced form regression

The reduced form regression regresses \(Y_i\) on \(Z_i\) and thus estimates the impact of the instrument on outcomes, which is equal to the ITE. It can be run using the With/Without estimator or OLS (both are numerically equivalent as Lemma A.3 shows) or OLS conditioning on observed covariates.

Example 4.10 Let’s see how these three approaches fare in our example.

# WW reduced form

WW.Reduced.Form.IV <- mean(y[Z==1])-mean(y[Z==0])

# Simple OLS

OLS.y.Z.IV <- lm(y~Z)

OLS.Reduced.Form.IV <- coef(OLS.y.Z.IV)[[2]]

# OLS conditioning on yB

OLS.y.Z.yB.IV <- lm(y~Z+yB)

OLSX.Reduced.Form.IV <- coef(OLS.y.Z.yB.IV)[[2]]The WW estimator of the reduced form impact of \(Z_i\) on \(y_i\) is equal to -0.029. The OLS estimator of the reduced form impact of \(Z_i\) on \(y_i\) is equal to -0.029. The OLS estimator of the reduced form impact of \(Z_i\) on \(y_i\) conditioning on \(y^B_i\) is equal to 0.058. Remember that the true ITE in the population in our model is equal to 0.066.

4.1.3.3 Structural regression

The final step of the analysis is to estimate the impact of \(D_i\) on \(Y_i\) using \(Z_i\) as an instrument. This can be done either by directly using the Wald estimator, by dividing the estimate of the reduced form by the result of the first stage, or by directly using the IV estimator (which is equivalent to the Wald estimator as Theorem 3.15 shows) or the IV estimator conditional on covariates.

Example 4.11 Let’s see how these four approaches fare in our example.

# Wald structural form

Wald.Structural.Form.IV <- (mean(y[Z==1])-mean(y[Z==0]))/(mean(Ds[Z==1])-mean(Ds[Z==0]))

# Simple IV

TSLS.y.D.Z.IV <- ivreg(y~Ds|Z)

TSLS.Structural.Form.IV <- coef(TSLS.y.D.Z.IV)[[2]]

# IV conditioning on yB

TSLS.y.D.Z.yB.IV <- ivreg(y~Ds+yB|Z+yB)

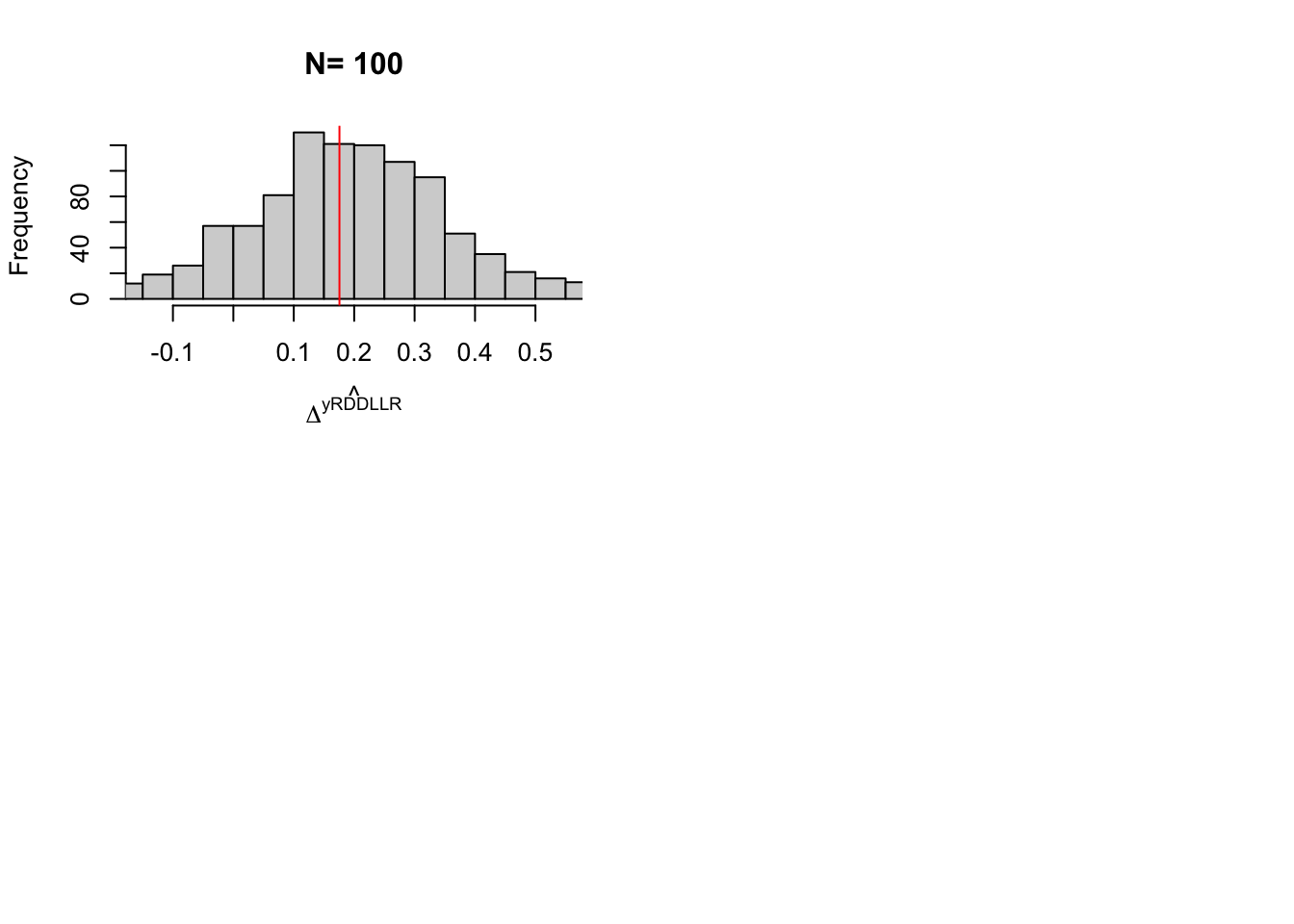

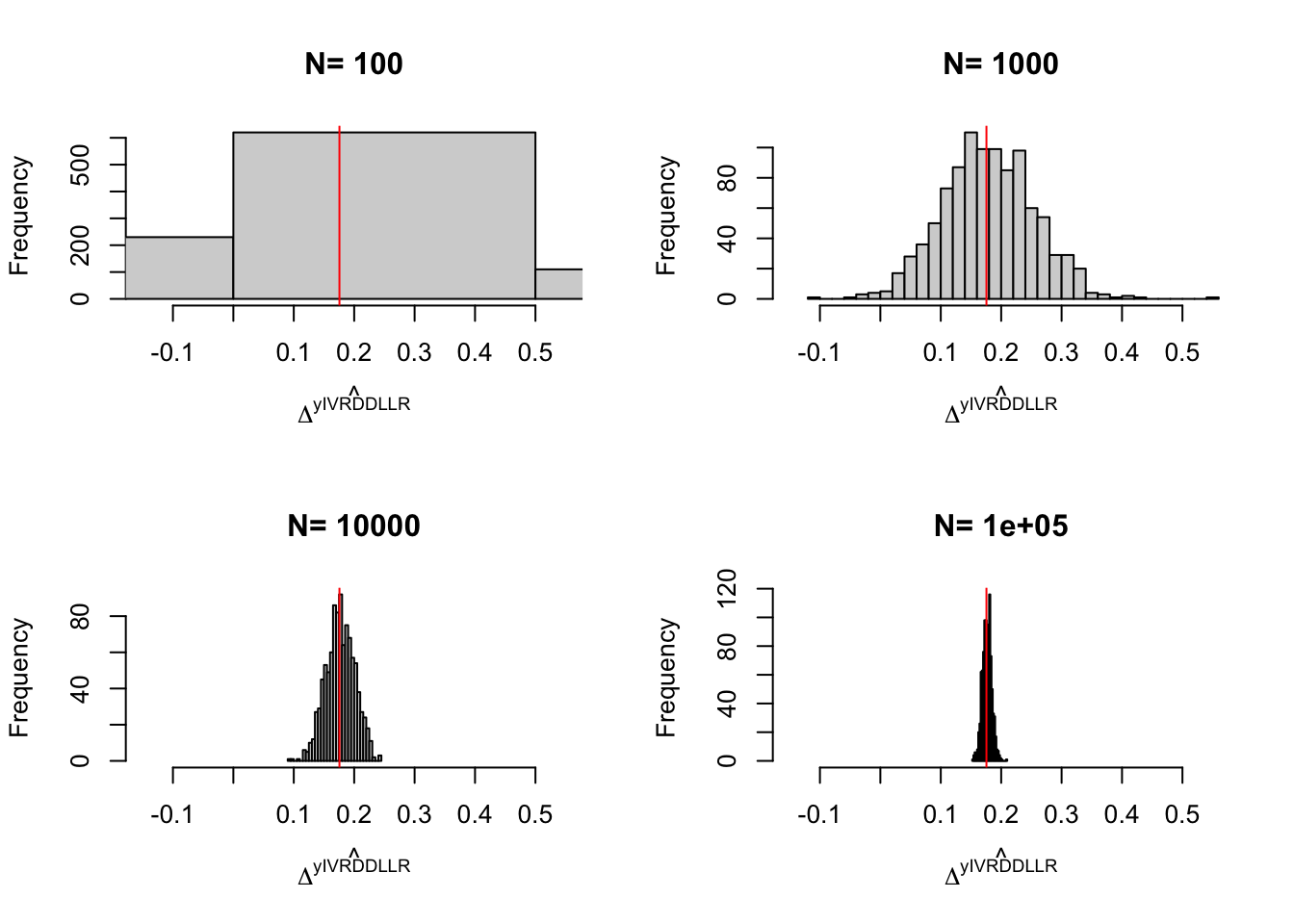

TSLSX.Structural.Form.IV <- coef(TSLS.y.D.Z.yB.IV)[[2]]The Wald estimator of the LATE is equal to \(\hat{\Delta}_{Wald}^{y}=\) -0.078. The IV estimator of the LATE is equal to \(\hat{\Delta}_{IV}^{y}=\) -0.078, and is numerically identical to the Wald estimator, as expected. The IV estimator of the LATE conditioning on \(y_i^B\) is equal to 0.172. Remember that the true LATE in the population in our model is equal to 0.179.

Remark. The last thing we might want to check is what the sampling noise of the IV estimator looks like and whether it is reduced by conditioning on observed covariates.

Example 4.12 Let’s see how sampling noise moves in our example.

Do it

4.1.4 Estimation of sampling noise

Remark. The framework we have seen here as been extended to multivalued instruments or treatments by several papers. Imbens and Angrist (1994) extend the framework to an ordered instrument. They show that the 2SLS estimator is a weighted average of LATEs for each values of the instrument, with positive weights summing to one. Angrist and Imbens (1995) extend the framework to he case where the treatment is an ordered discrete variable and there are multiple dichotomous instruments. They again show that the 2SLS estimator is a weighted average of LATEs with positive weights summing to one. Heckman and Vytlacil (1999) extend the framework to a case with a continuous instrument and show that one can the define a Marginal Treatment Effect (or MTE) that is equal to the effect of the treatment on individuals that have the same unobserved propensity to take the treatment. They show that the MTE can be identified by a limiting form of Wald estimator that they call a Local Instrumental Variable estimator. They also show that average treatment effect parameters such as TT, ATE and LATE are all weighted averages of the MTE, with positive weights summing to one. Under strong support conditions on the side of the instrument, one can thus in principle recover all treatment effect parameters with a continuous instrument.

Remark. One important concern with the first stage regression is that of weak instruments. When Assumption 4.1 does not hold and the impact on the instrument on take up is actually zero in the population, the Wald estimator is not well-defined.

Expand

4.2 Regression Discontinuity Designs

Regression Discontinuity Designs emerge in situations where there is a discontinuity in the probability of receiving the treatment. If there is also a discontinuity in outcomes, it is interpreted as the effect of the treatment. We distinguish two RD Designs:

- Sharp Designs (the probability of receiving the treatment moves from 0 to 1 at the discontinuity,

- Fuzzy Designs (the probability of receiving the treatment moves from values strictly between 0 and 1 at the discontinuity.

Let’s examine both of these configurations in turn.

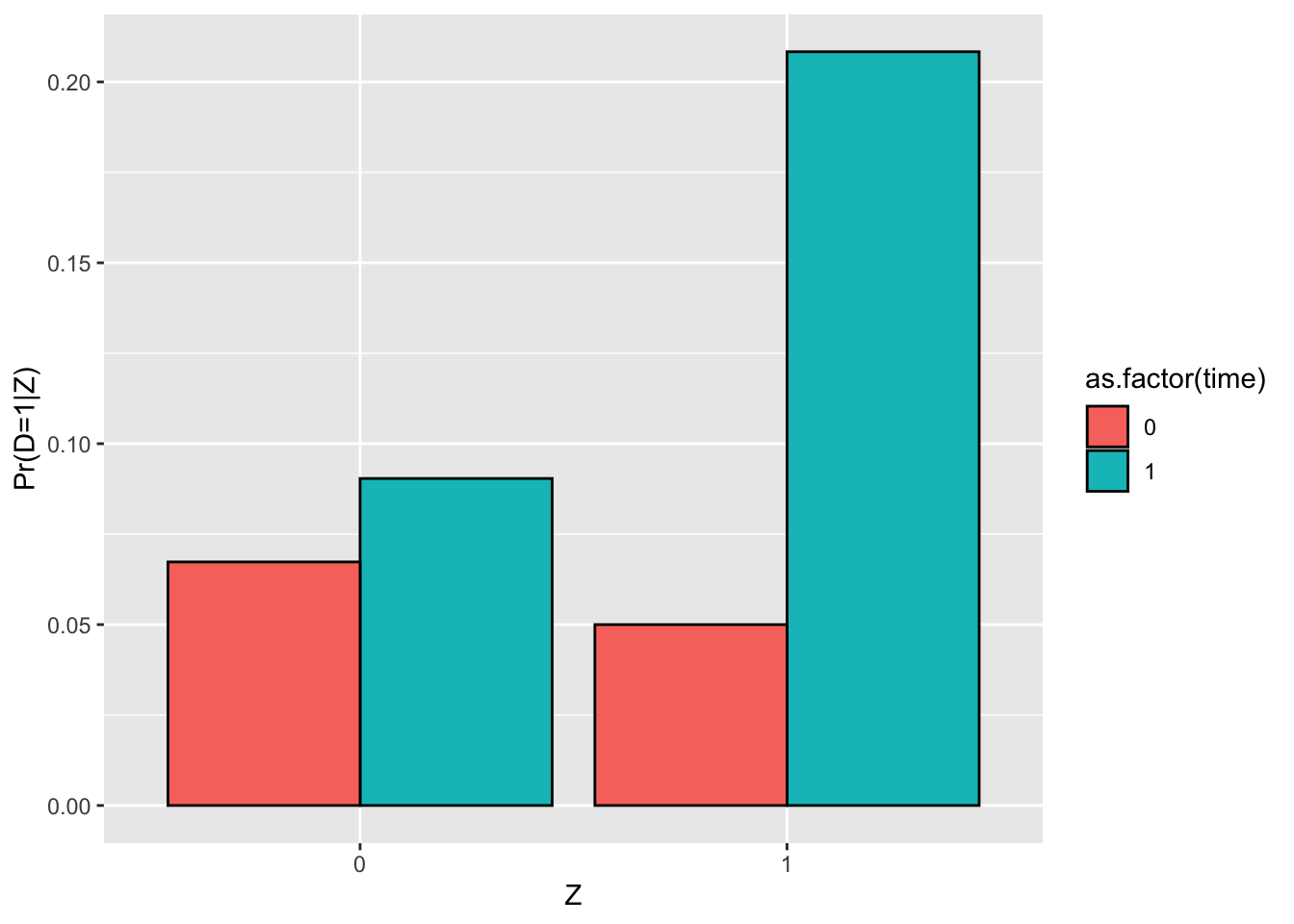

4.2.2 Fuzzy Regression Discontinuity Designs

We say that a Regression Discontinuity Design is fuzzy when the probability of obtaining the treatment does not move from \(0\) to \(1\) when moving across the threshold of the running variable, but moves from some probability to a different one, when both are strictly positive and strictly smaller than one. This means that there is some event that discontinuously affects the enrollment of some people but not the enrollment of others. We thus are going to see compliers, always takers and never takers, as we did with Instrumental Variables. In this section, we are going to study the identification, estimation and estimation of sampling noise of treatment effects in the Fuzzy Regression Discontinuity Design.

4.2.2.1 Identification

The first assumption characterizing the Fuzzy Regression Discontinuity Design is the asumption that the probability of receiving treatment is discontinuous:

Hypothesis 4.9 (Fuzzy RDD Design) There exists a running variable \(Z_i\) and a threshold \(\bar{z}\) such that:

\[\begin{align*} \lim_{e\rightarrow 0^{+}}\Pr(D_i=1|Z_i=\bar{z}-e) & \neq \lim_{e\rightarrow 0^{+}}\Pr(D_i=1|Z_i=\bar{z}+e). \end{align*}\]

Example 4.21 Let’s see how this plays in our example. Let’s first simulate some data:

\[\begin{align*} y_i^1 & = y_i^0+\bar{\alpha}+\theta\mu_i+\eta_i \\ y_i^0 & = \mu_i+\delta+U_i^0+\gamma(y_i^B-\bar{y})^2 \\ U_i^0 & = \rho U_i^B+\epsilon_i \\ y_i^B & =\mu_i+U_i^B \\ U_i^B & \sim\mathcal{N}(0,\sigma^2_{U}) \\ D_i & = \uns{y_i^B\leq\bar{y} \land V_i\leq\kappa \lor y_i^B>\bar{y} \land V_i>\kappa} \\ (\eta_i,\omega_i,V_i) & \sim\mathcal{N}(0,0,0,\sigma^2_{\eta},\sigma^2_{\omega},\sigma^2_{\mu}+\sigma^2_{U},0,0,0). \end{align*}\]

param <- c(param,1)

names(param)[[length(param)]] <- "kappa"

N <- 1000

set.seed(123)

mu <- rnorm(N,param["barmu"],sqrt(param["sigma2mu"]))

UB <- rnorm(N,0,sqrt(param["sigma2U"]))

yB <- mu + UB

YB <- exp(yB)

Ds <- rep(0,N)

V <- rnorm(N,0,sqrt(param["sigma2mu"]+param["sigma2U"]))

Ds[((yB<=log(param["barY"])) & (V<=param["kappa"])) | ((yB>log(param["barY"])) & (V>param["kappa"])) ] <- 1

epsilon <- rnorm(N,0,sqrt(param["sigma2epsilon"]))

eta<- rnorm(N,0,sqrt(param["sigma2eta"]))

U0 <- param["rho"]*UB + epsilon

y0 <- mu + U0 + param["delta"] + param["gamma"]*(yB-param["baryB"])^2

alpha <- param["baralpha"]+ param["theta"]*mu + eta

y1 <- y0+alpha

Y0 <- exp(y0)

Y1 <- exp(y1)

y <- y1*Ds+y0*(1-Ds)

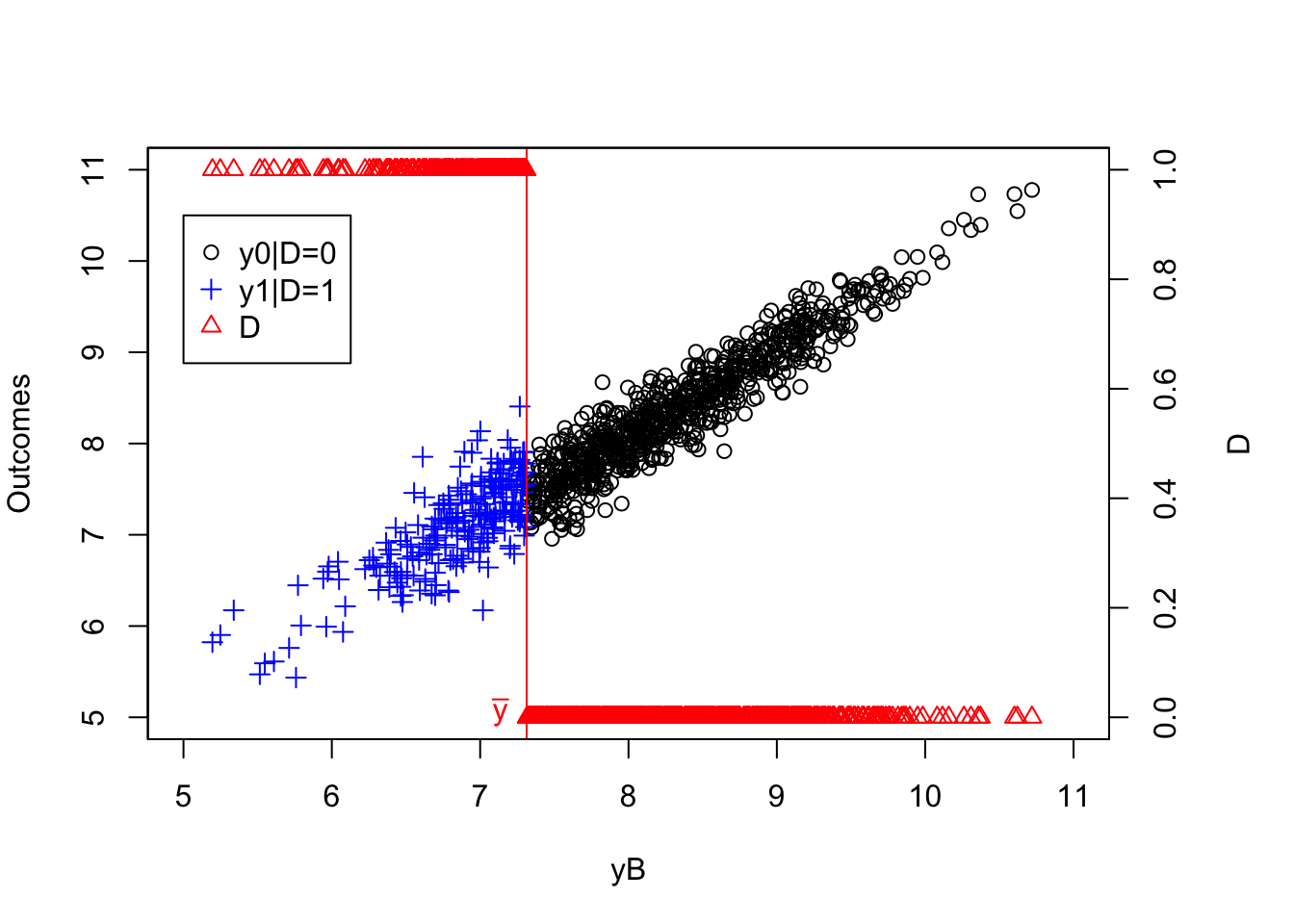

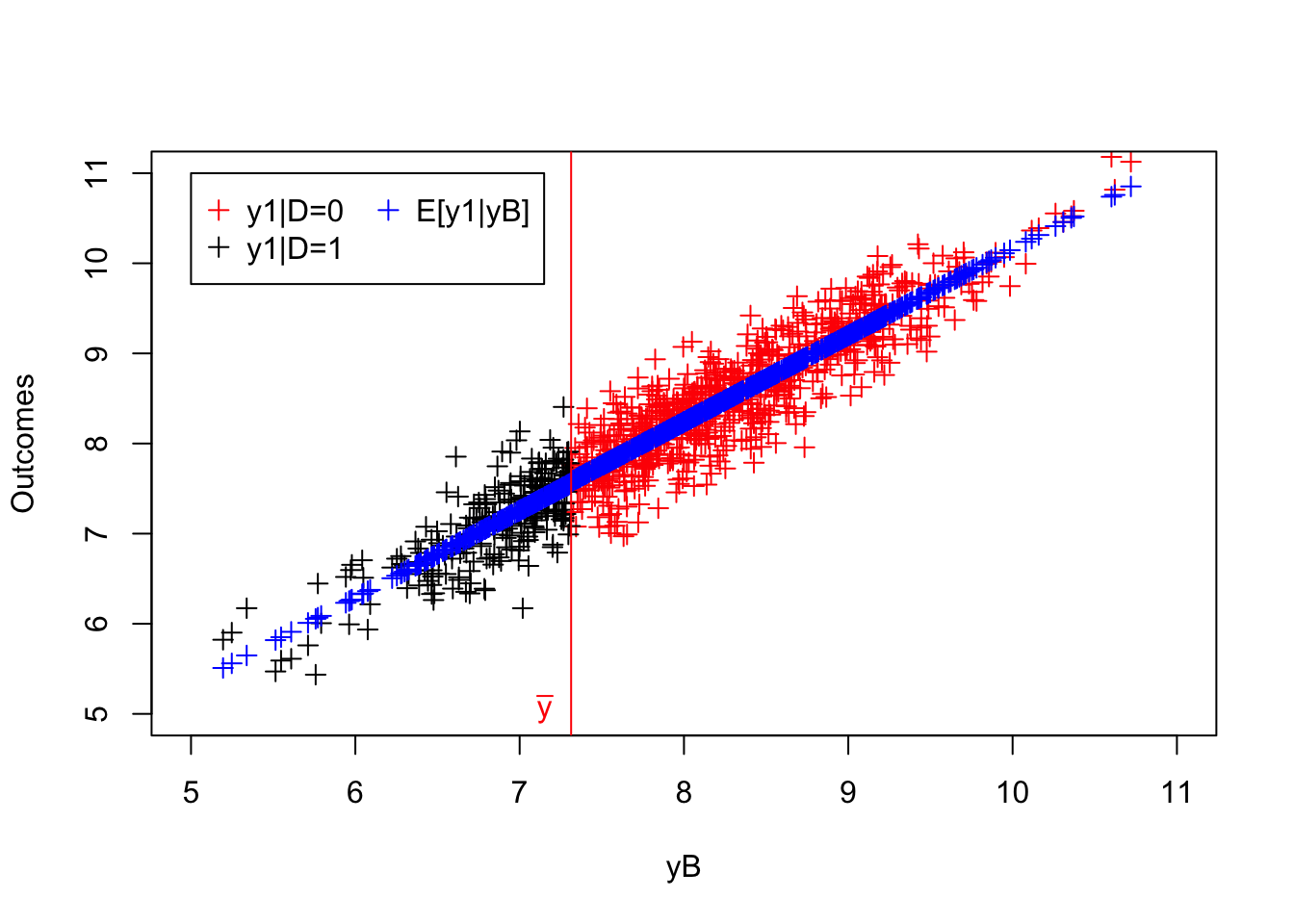

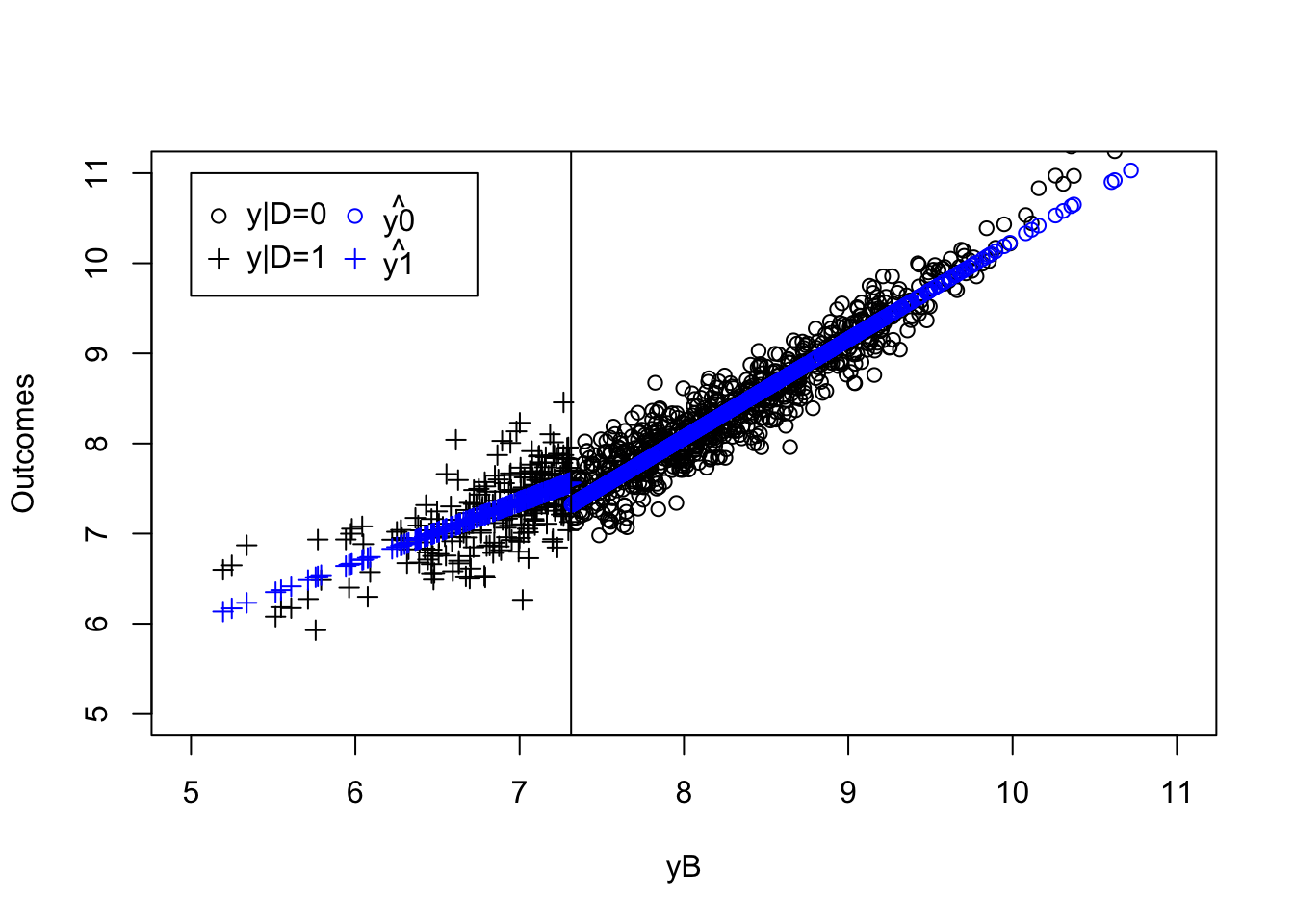

Y <- Y1*Ds+Y0*(1-Ds)Let us now plot the corresponding data:

par(mar=c(5,4,4,5))

plot(yB[Ds==0],y0[Ds==0],pch=1,xlim=c(5,11),ylim=c(5,11),xlab="yB",ylab="Outcomes")

points(yB[Ds==1],y[Ds==1],pch=3,col='black')

abline(v=log(param["barY"]),col='red')

legend(5,10.5,c('y0|D=0','y1|D=1','D'),pch=c(1,3,2),col=c('black','black','red'),ncol=1)

par(new=TRUE)

plot(yB,Ds,pch=2,col='red',xlim=c(5,11),xaxt="n",yaxt="n",xlab="",ylab="")

axis(4)

mtext("D",side=4,line=3)

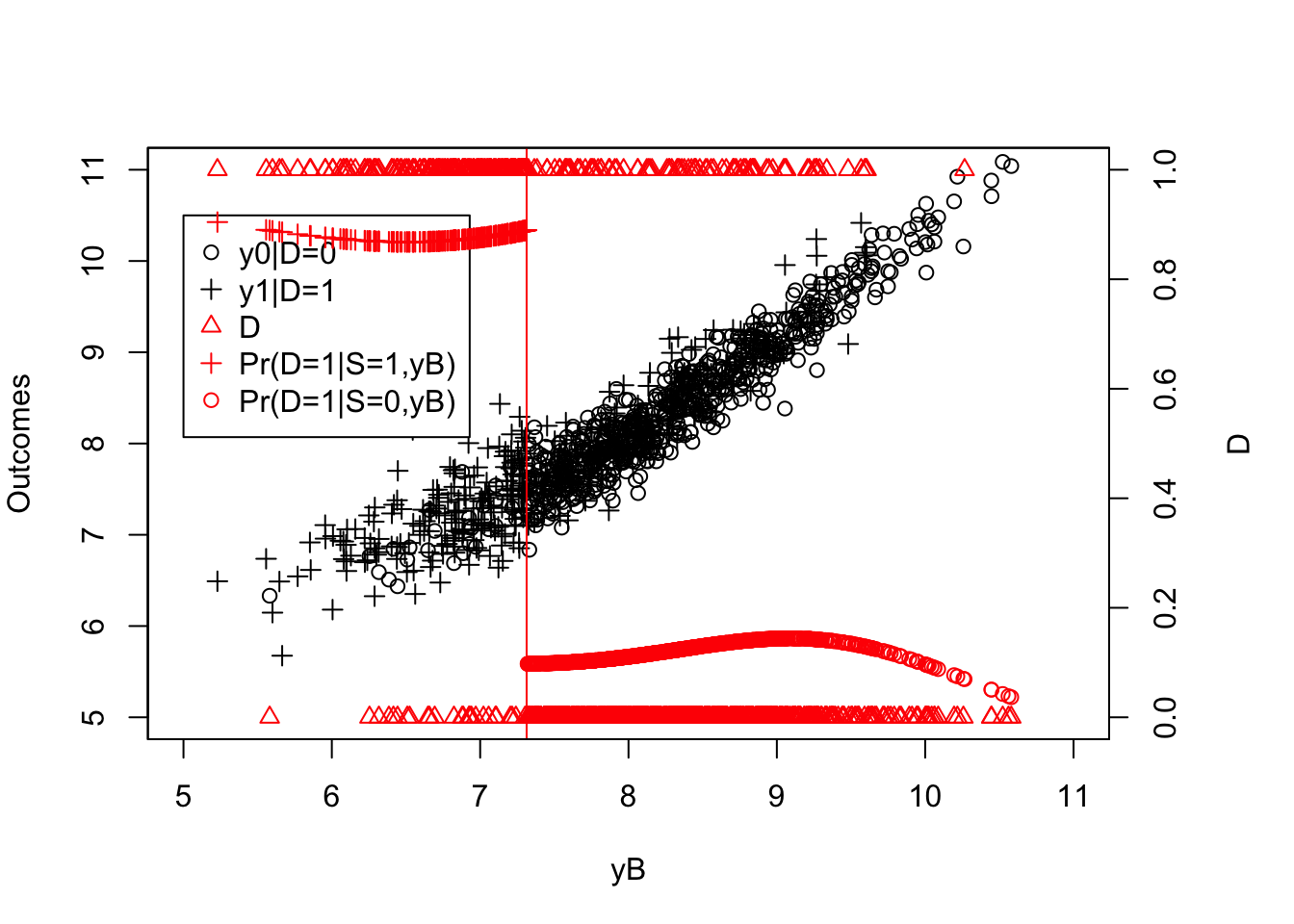

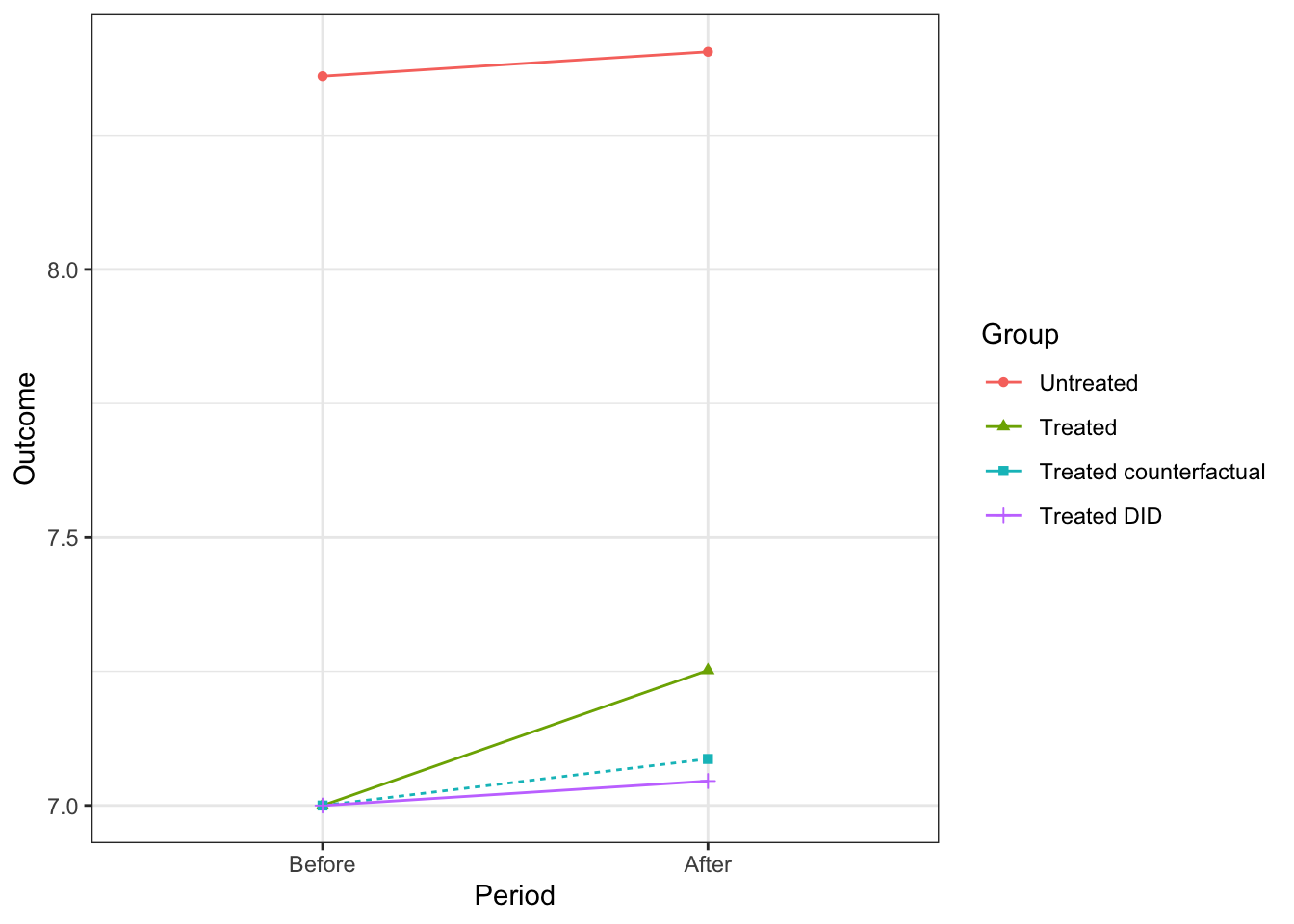

Figure 4.13: Fuzzy RDD

As you can see on Figure 4.13, there are untreated individuals below \(\bar{y}\) and treated individual above \(\bar{y}\). This is why the design is not sharp. But Figure 4.13 fails to convey that the design still exhibits a discontinuity in the proportion of treated. In order to see it, we need to compute an estimate of the probability of being treated conditional on the running variable (\(y_i^B\)): \(\Pr(D_i=1|y_i^B=y)\). It turns out that this quantity is simply the expected value of \(D_i\) conditional on \(y_i^B\). We’ve just learned a cool way to estimate a conditional expectation: Local Linear Regression. Let’s put it to work, using \(D_i\) as the outcome variable now:

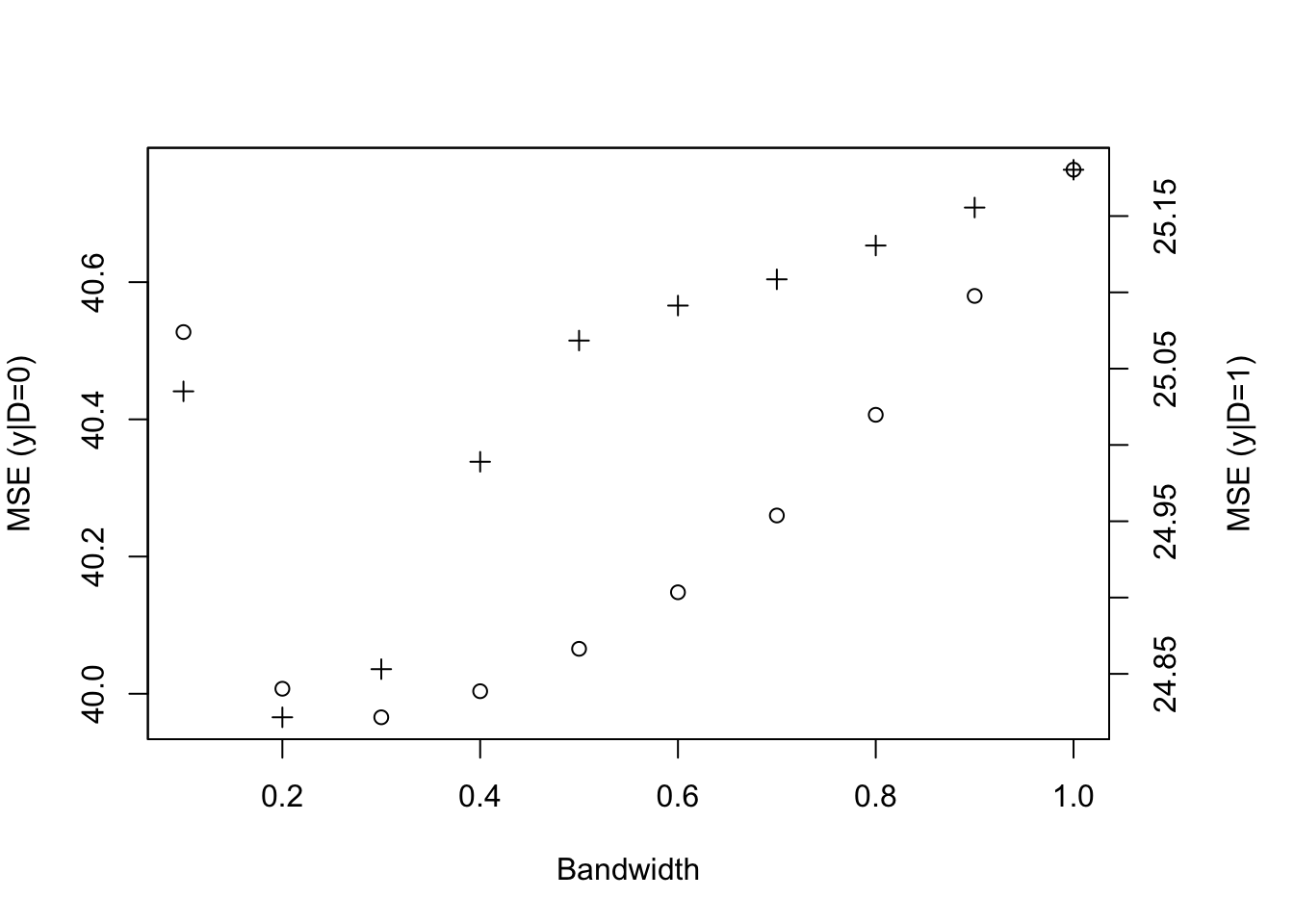

kernel <- 'gaussian'

MSE.grid <- seq(0.1,1,by=.1)

# the instrument in our case is the position relative to the threshoold (or side, hence S)

S <- rep(0,length(yB))

S[yB<=log(param['barY'])] <- 1

# computing optimal bandwidth using Cross Validation

MSE.pr.llr.0 <- sapply(MSE.grid,MSE.llr,y=Ds,D=S,x=yB,kernel=kernel,d=0)

MSE.pr.llr.1 <- sapply(MSE.grid,MSE.llr,y=Ds,D=S,x=yB,kernel=kernel,d=1)

bwD0 <- MSE.grid[MSE.pr.llr.0==min(MSE.pr.llr.0)]

bwD1 <- MSE.grid[MSE.pr.llr.1==min(MSE.pr.llr.1)]

# LLR estoimate of conditional expectation of D on yB

Pr.D0.llr <- llr(Ds[S==0],yB[S==0],yB[S==0],bw=bwD0,kernel=kernel)

Pr.D1.llr <- llr(Ds[S==1],yB[S==1],yB[S==1],bw=bwD1,kernel=kernel) Let’s now plot the resulting estimate:

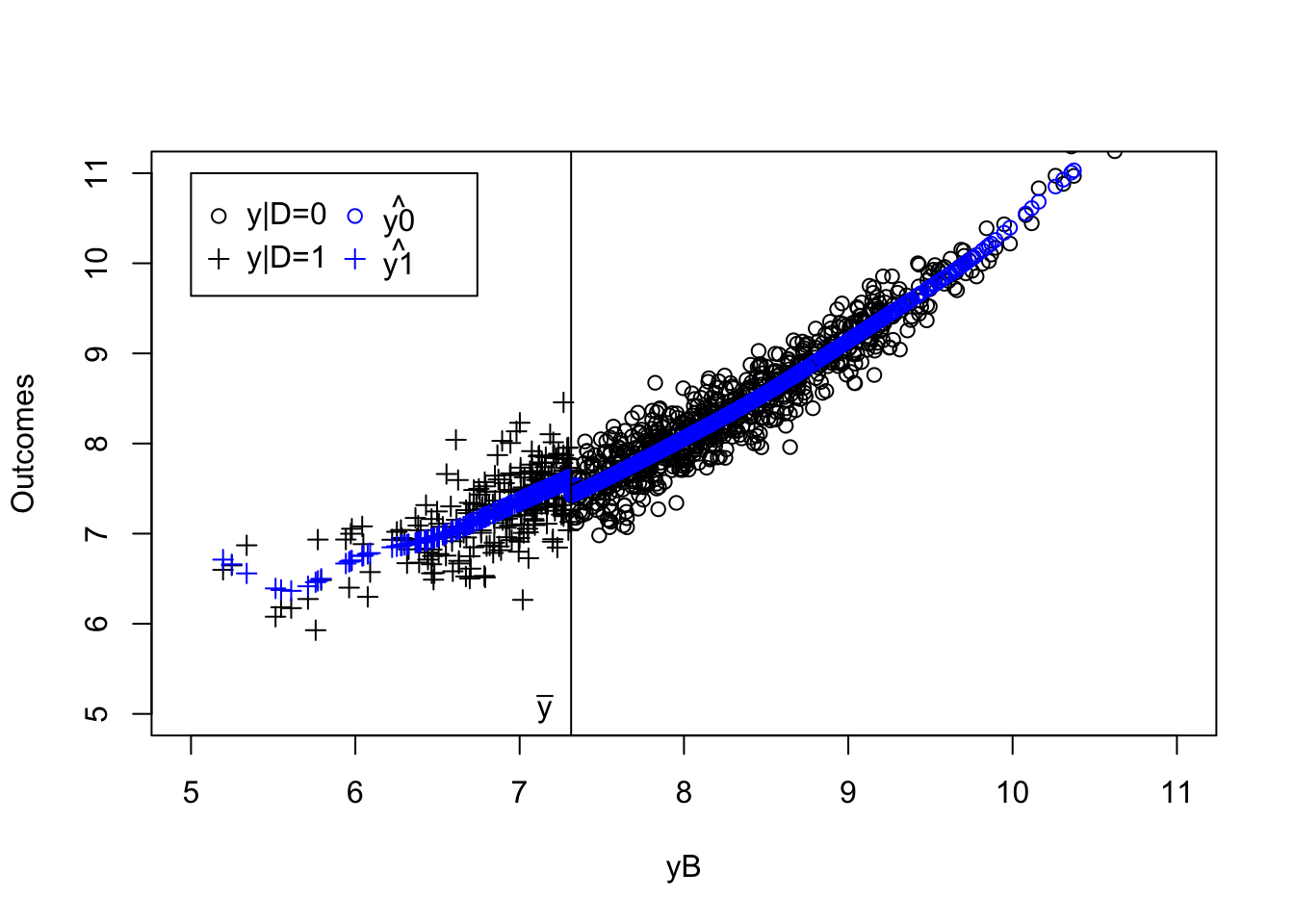

par(mar=c(5,4,4,5))

plot(yB[Ds==0],y0[Ds==0],pch=1,xlim=c(5,11),ylim=c(5,11),xlab="yB",ylab="Outcomes")

points(yB[Ds==1],y[Ds==1],pch=3,col='black')

abline(v=log(param["barY"]),col='red')

legend(5,10.5,c('y0|D=0','y1|D=1','D','Pr(D=1|S=1,yB)','Pr(D=1|S=0,yB)'),pch=c(1,3,2,3,1),col=c('black','black','red','red','red'),ncol=1)

par(new=TRUE)

plot(yB,Ds,pch=2,col='red',xlim=c(5,11),xaxt="n",yaxt="n",xlab="",ylab="")

points(yB[S==0],Pr.D0.llr,col='red')

points(yB[S==1],Pr.D1.llr,col='red',pch=3)

axis(4)

mtext("D",side=4,line=3)

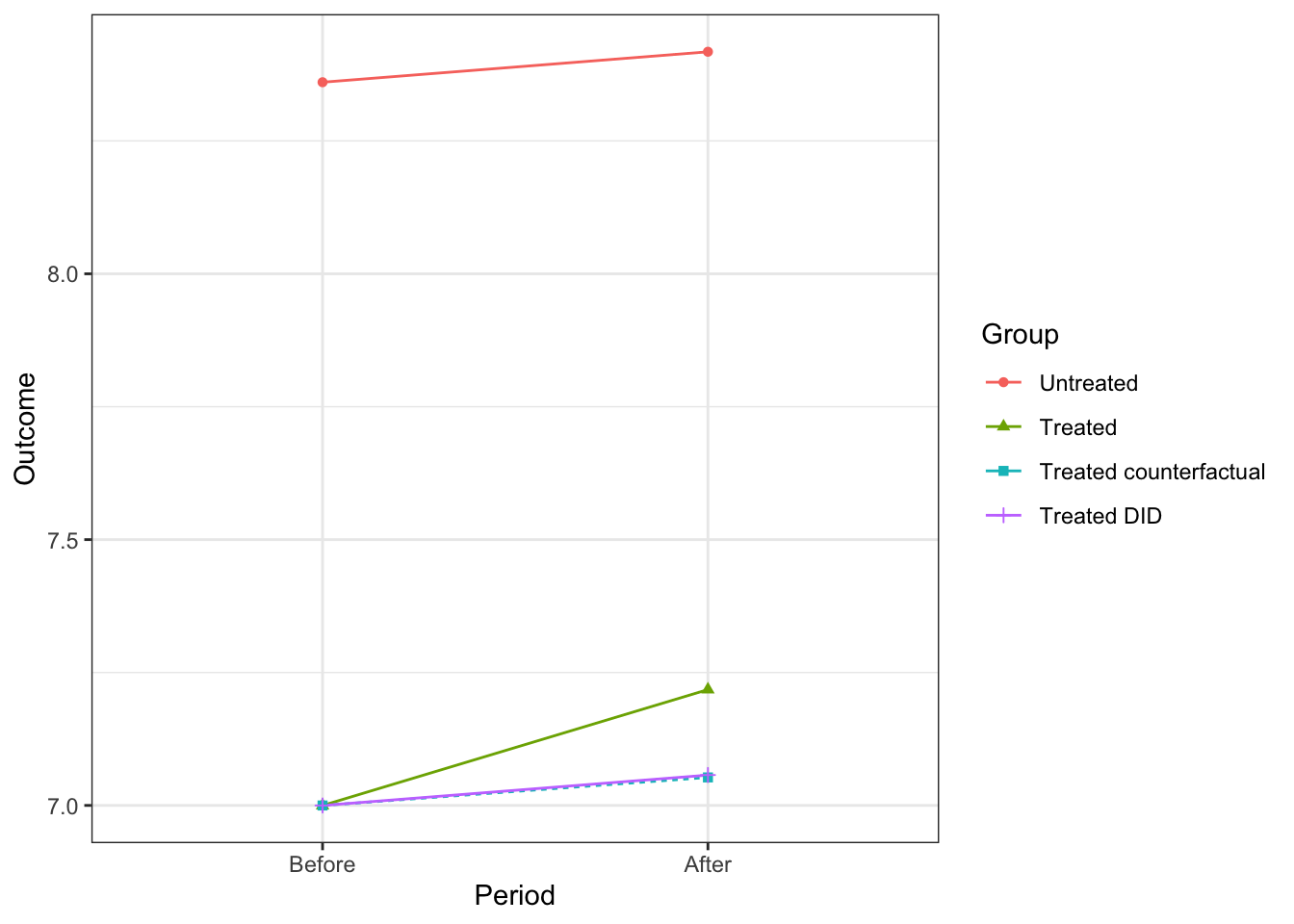

Figure 4.14: Fuzzy RDD with probability of being treated as a function of the running variable

Figure 4.13 now clearly shows that the probability of receiving the treatment decreases discontinuously when crossing the threshold \(\bar{y}\). The decrease is not a move from \(1\) to \(0\) and hence is a clear case of a Fuzzy Regression Discontinuity Design.

Identification in a Fuzzy Regression Discontinuity Design proceeds along the same lines as with Instrumental Variables: either we assume that treatment effects are somewhat independent from Types or we impose that there are no defiers (though some more options are feasible, as we saw in the section on Instrumental Variables).

4.2.2.1.1 Identification under Independence of treatment effects from Types

Let us first define the types in a Fuzzy Regression Discontinuity Design. For that, we define \(D_i(z)\) as the potential outcome of individual \(i\) when \(Z_i=z\). We have (as seems natural now) four possible types of individuals depending on how they react when exogenously moved from one side of the threshold to the other:

- Always takers (\(T^{\bar{z}}_i=a\)) are such that \(\lim_{z\rightarrow \bar{z}^+}D_i(z)=\lim_{z\rightarrow \bar{z}^-}D_i(z)=1\).

- Never takers (\(T^{\bar{z}}_i=n\)) are such that \(\lim_{z\rightarrow \bar{z}^+}D_i(z)=\lim_{z\rightarrow \bar{z}^-}D_i(z)=0\).

- Compliers (\(T^{\bar{z}}_i=c\)) are such that \(\lim_{z\rightarrow \bar{z}^+}D_i(z)-\lim_{z\rightarrow \bar{z}^-}D_i(z)=1\).

- Defiers (\(T^{\bar{z}}_i=d\)) are such that \(\lim_{z\rightarrow \bar{z}^+}D_i(z)-\lim_{z\rightarrow \bar{z}^-}D_i(z)=-1\).

We can now encode the assumption of independent treatment effects:

Hypothesis 4.10 (Independence of Treatment Effects from Types) We assume that treatment effects are independent of Types at the discontinuity:

\[\begin{align*} \Delta^Y_i\Ind T^{\bar{z}}_i|Z_i=z. \end{align*}\]

We are now equipped to prove identification in the Fuzzy Regression Discontinuity Design under Independence of Treatment Effects from Types:

Theorem 4.6 (Identification of TT(z) in Fuzzy RDD under Independence) Under Assumptions 4.8 and 4.10, we have:

\[\begin{align*} \Delta^Y_{RDDWALD} & = \Delta^Y_{TT}(z), \end{align*}\]

with

\[\begin{align*} \Delta^Y_{RDDWALD} & = \frac{\lim_{e\rightarrow 0^{+}}\esp{Y_i|Z_i=\bar{z}-e} - \lim_{e\rightarrow 0^{+}}\esp{Y_i|Z_i=\bar{z}+e}} {\lim_{e\rightarrow 0^{+}}\Pr(D_i=1|Z_i=\bar{z}-e) - \lim_{e\rightarrow 0^{+}}\Pr(D_i=1|Z_i=\bar{z}+e)} \end{align*}\]

Proof. Using the switching equation and Assumption 4.8, we can decompose the numerator of the Local Wald estimator as follows:

\[\begin{align*} \lim_{z\rightarrow \bar{z}^+}\esp{Y_i|Z_i=z}&=\esp{Y_i^1|Z_i=\bar{z},T^{\bar{z}}_i=a}\Pr(T_i=a|Z_i=\bar{z})\\ & \phantom{=}+\esp{Y_i^1|Z_i=\bar{z},T^{\bar{z}}_i=c}\Pr(T_i=c|Z_i=\bar{z})\\ & \phantom{=}+\esp{Y_i^0|Z_i=\bar{z},T^{\bar{z}}_i=d}\Pr(T_i=d|Z_i=\bar{z})\\ & \phantom{=}+\esp{Y_i^0|Z_i=\bar{z},T^{\bar{z}}_i=n}\Pr(T_i=n|Z_i=\bar{z})\\ \lim_{z\rightarrow \bar{z}^-}\esp{Y_i|Z_i=z}&=\esp{Y_i^1|Z_i=\bar{z},T^{\bar{z}}_i=a}\Pr(T_i=a|Z_i=\bar{z})\\ & \phantom{=}+\esp{Y_i^0|Z_i=\bar{z},T^{\bar{z}}_i=c}\Pr(T_i=c|Z_i=\bar{z})\\ & \phantom{=}+\esp{Y_i^1|Z_i=\bar{z},T^{\bar{z}}_i=d}\Pr(T_i=d|Z_i=\bar{z})\\ & \phantom{=}+\esp{Y_i^0|Z_i=\bar{z},T^{\bar{z}}_i=n}\Pr(T_i=n|Z_i=\bar{z})\\ N_{\bar{z}}&= \lim_{e\rightarrow 0^{+}}\esp{Y_i|Z_i=\bar{z}-e}- \lim_{e\rightarrow 0^{+}}\esp{Y_i|Z_i=\bar{z}+e}\\ & = \esp{Y_i^1-Y_i^0|Z_i=\bar{z},T^{\bar{z}}_i=c}\Pr(T_i=c|Z_i=\bar{z})\\ & \phantom{=}-\esp{Y_i^1-Y_i^0|Z_i=\bar{z},T^{\bar{z}}_i=d}\Pr(T_i=d|Z_i=\bar{z}) \end{align*}\]

We also have that the denominator of the Local Wald estimator is equal to:

\[\begin{align*} D_{\bar{z}}& =\lim_{z\rightarrow \bar{z}^+}\Pr(D_i=1|Z_i=z)-\lim_{z\rightarrow \bar{z}^-}\Pr(D_i=1|Z_i=z)\\ & = \lim_{z\rightarrow \bar{z}^+}\left(\Pr(T_i^z=a|Z_i=z)+\Pr(T_i^z=c|Z_i=z)\right)\\ & \phantom{=}-\lim_{z\rightarrow \bar{z}^-}\left(\Pr(T_i^z=a|Z_i=z)+\Pr(T_i^z=d|Z_i=z)\right)\\ & = \Pr(T_i^{\bar{z}}=c|Z_i=\bar{z})-\Pr(T_i^{\bar{z}}=d|Z_i=\bar{z}) \end{align*}\]

Under Assumption 4.10, we have that \(\esp{Y_i^1-Y_i^0|Z_i=\bar{z},T^{\bar{z}}_i=c}=\esp{Y_i^1-Y_i^0|Z_i=\bar{z},T^{\bar{z}}_i=d}=\esp{Y_i^1-Y_i^0|Z_i=\bar{z}}\). This proves the result.

4.2.2.1.2 Identification under Monotonicity

We can weaken Assumption 4.10 and allow for treatment effects correlated with types (which seems highly likely) if we are willing to make stronger assumptions on the distribution of types. As we have seen before with Instrumental Variables, assuming away defiers enables the identification of a Local Average Treatment Effect. Let’s see how this works:

Hypothesis 4.11 (Monotonicity in a Fuzzy Regression Discontinuity Design) \(D_i(z)\) is non-decreasing at \(z=\bar{z}\) (or \(\Pr(T^{\bar{z}}_i=d|Z_i=\bar{z})=0\)).

We can now prove identification of the local average treatment effect:

4.2.2.2 Estimation

For estimation, we can either use the Local Linear Regression Wald estimator or use a simplified version proposed by Imbens and Lemieux (2008). Let’s see how both of them work in practice.

4.2.2.2.1 Estimation using Local Linear Regression

The Wald LLR estimator can be formed as follows, with \(S_i=1\) when \(Z_i\geq\bar{z}\) and \(S_i=0\) when \(Z_i<\bar{z}\) (in the case where \(\lim_{e\rightarrow 0^{+}}\Pr(D_i=1|Z_i=\bar{z}-e) < \lim_{e\rightarrow 0^{+}}\Pr(D_i=1|Z_i=\bar{z}+e))\) (simply revert the values of \(S_i\) if the converse is true)):

- Estimate \(\hatesp{Y^1_i|S_i=1,Z_i=\bar{z}}\) and \(\hatesp{D_i|S_i=1,Z_i=\bar{z}}\) using LLR on the right of \(\bar{z}\).

- Estimate \(\hatesp{Y^0_i|S_i=0,Z_i=\bar{z}}\) and \(\hatesp{D_i|S_i=0,Z_i=\bar{z}}\) using LLR on the left of \(\bar{z}\).

- \(\hat\Delta^{Y}_{WaldRDDLLR}=\frac{\hatesp{Y^1_i|S_i=1,Z_i=\bar{z}}-\hatesp{Y^0_i|S_i=0,Z_i=\bar{z}}}{\hatesp{D_i|S_i=1,Z_i=\bar{z}}-\hatesp{D_i|S_i=0,Z_i=\bar{z}}}\).

- For bandwidth choice: use cross-validation for each estimation.

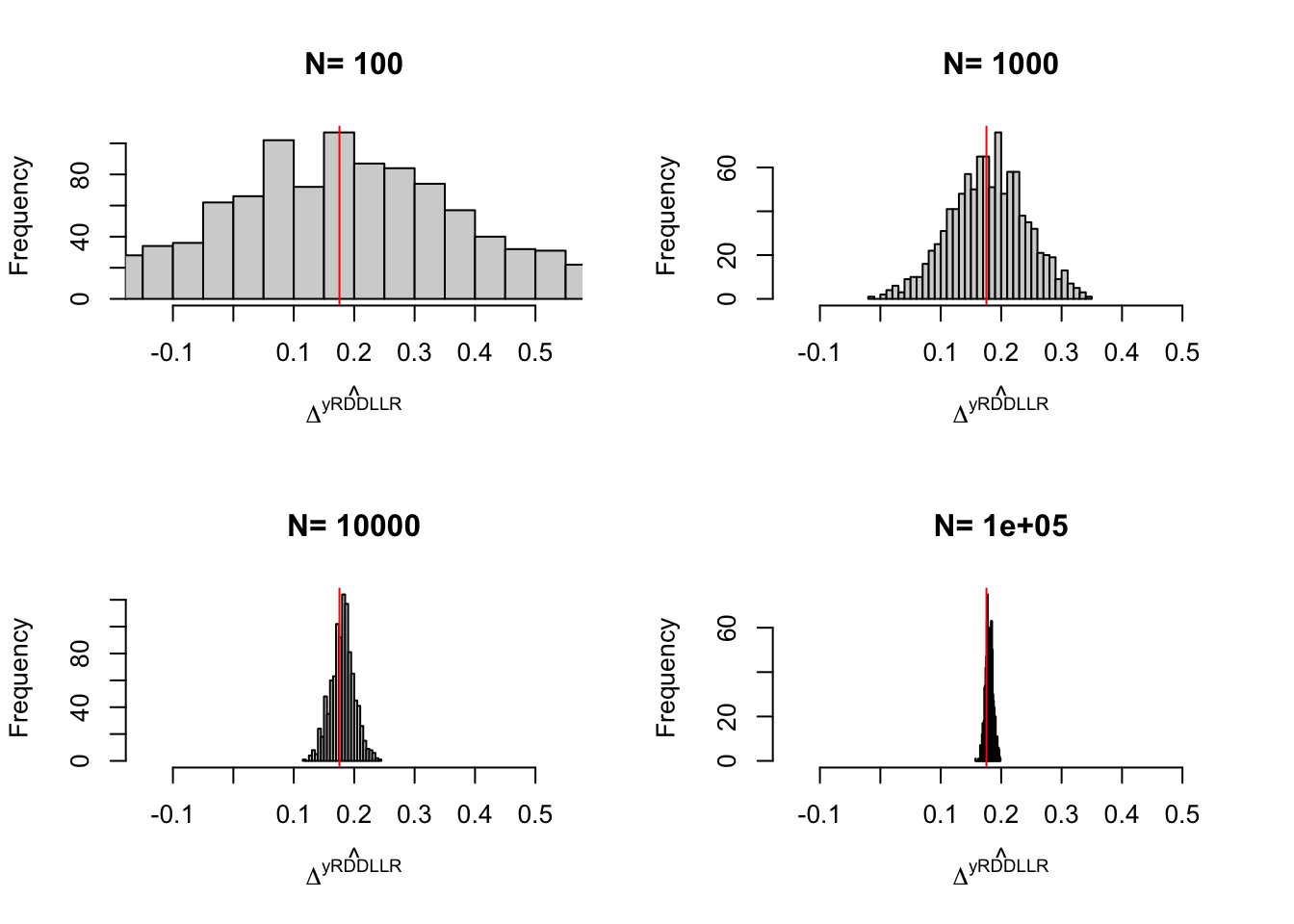

Example 4.22 Let’s see how this works in our example. Note that we have to revert the definition of \(S_i\) since the highest treatment probability is below \(\bar{y}\).

# bandwidth choice

MSE.llr.S0 <- sapply(MSE.grid,MSE.llr,y=y,D=S,x=yB,kernel=kernel,d=0)

MSE.llr.S1 <- sapply(MSE.grid,MSE.llr,y=y,D=S,x=yB,kernel=kernel,d=1)

bwyS0 <- MSE.grid[MSE.llr.S0==min(MSE.llr.S0)]

bwyS1 <- MSE.grid[MSE.llr.S1==min(MSE.llr.S1)]

# LLR estimation

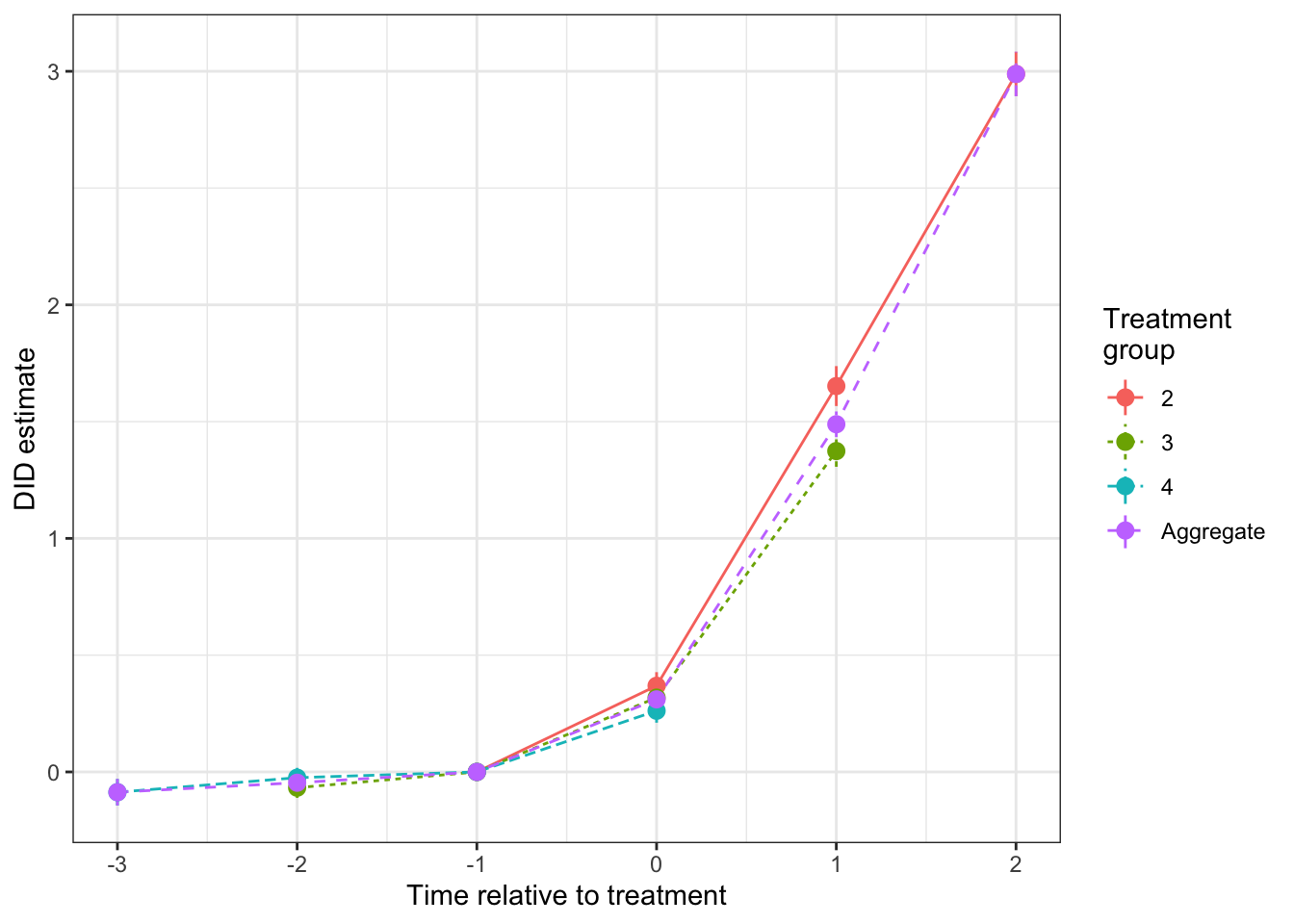

y.S0.llr <- llr(y[S==0],yB[S==0],yB[S==0],bw=bwyS0,kernel=kernel)